We are thrilled to announce two major feature additions to our Tensormesh platform. They have been designed to give you greater flexibility in model deployment and unparalleled visibility into your Large Language Model (LLM) performance.

1. Seamless Integration with Hugging Face Public Models

Deploying state-of-the-art LLMs just got dramatically easier. You can now browse and deploy more than 300,000 open-source models directly from the Hugging Face public library.

What does this mean for you?

When you initiate a new deployment, you are no longer limited to our curated list of popular, pre-configured models. During Step 2 of the deployment process—Choose a Model—you can now select one of the public open-source models from Hugging Face.

To get started, navigate to the Models panel and click the Deploy Model button. While selecting your model, remember to consider the size of the LLM relative to your allocated GPU memory size.
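As a rough guide for the sizing check above, the sketch below estimates the memory needed just to hold a model's weights and compares it with the GPU memory you have allocated. The function names and the 20% headroom reserved for the KV cache and activations are illustrative assumptions, not part of the Tensormesh platform, and real requirements vary with context length, batch size, and quantization.

```python
def estimate_weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights alone, in GiB.

    bytes_per_param=2 corresponds to 16-bit weights (FP16/BF16);
    use 4 for FP32 or 1 for 8-bit quantized weights.
    """
    return num_params * bytes_per_param / (1024 ** 3)


def fits_on_gpu(num_params: float, gpu_memory_gib: float,
                bytes_per_param: int = 2, headroom: float = 0.2) -> bool:
    """Return True if the weights fit after reserving some headroom.

    headroom is an assumed fraction of GPU memory kept free for the
    KV cache, activations, and runtime overhead.
    """
    usable_gib = gpu_memory_gib * (1 - headroom)
    return estimate_weight_memory_gib(num_params, bytes_per_param) <= usable_gib


if __name__ == "__main__":
    # A 7-billion-parameter model in 16-bit precision on a 24 GiB GPU.
    print(round(estimate_weight_memory_gib(7e9), 2))
    print(fits_on_gpu(7e9, gpu_memory_gib=24))
```

If the check fails, options include choosing a smaller model, using a quantized variant, or allocating more GPU memory.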

For advanced users or those managing secure deployments: if the model you wish to deploy is private, you can still add your Hugging Face token during the configuration phase. This new integration lets you provision models quickly and start your deployment sooner.

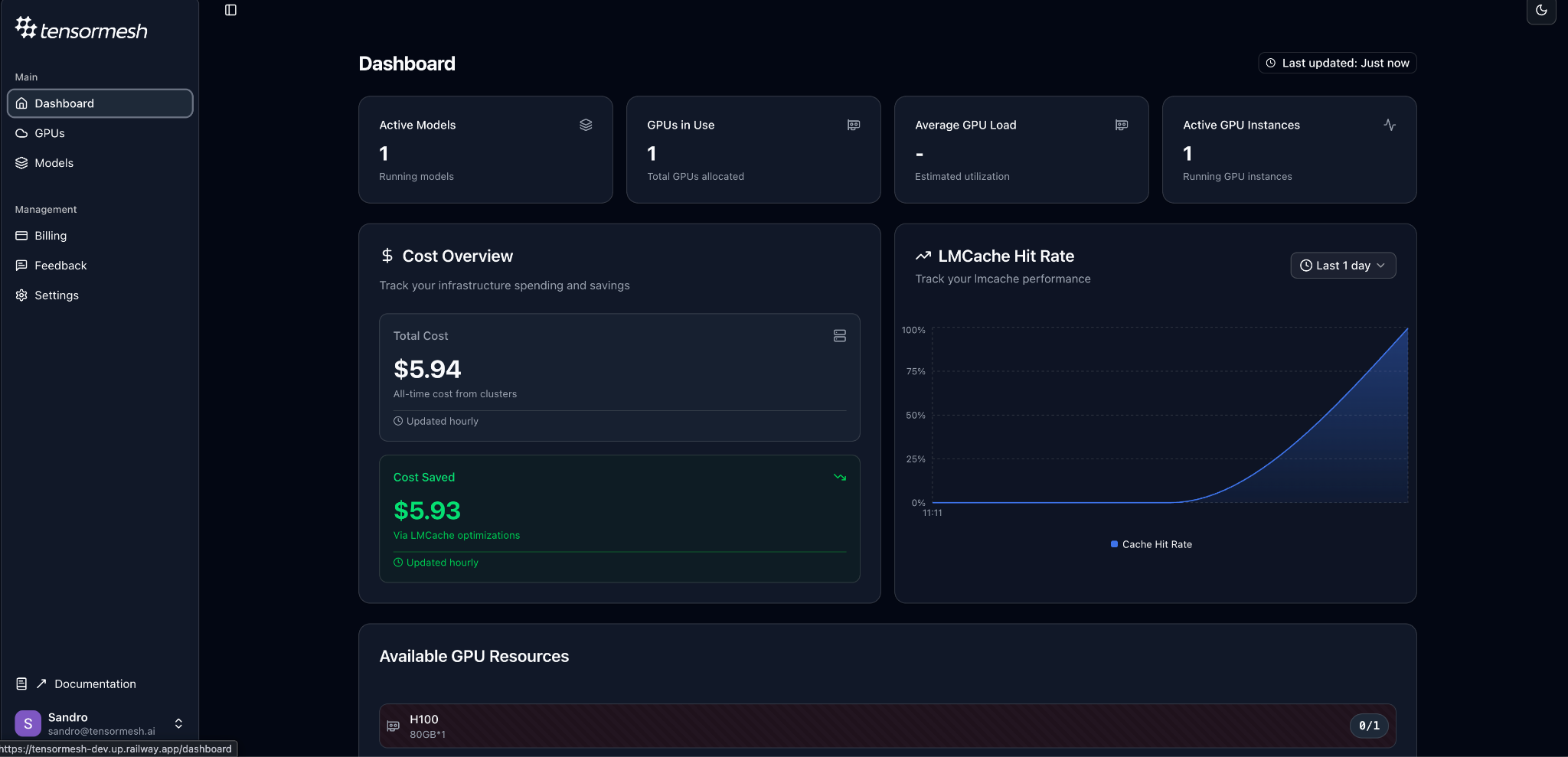

2. Introducing the Performance Metrics Monitoring Dashboard

Visibility is key to optimization. We have added a brand-new panel to your main dashboard that allows you to monitor critical performance metrics of your deployed LLMs. This new monitoring capability gives you the insights necessary to fine-tune resource allocation and ensure optimal user experience.

The dashboard provides monitoring for five essential performance metrics:

Time to First Token (TTFT)

This is defined as the time interval from the arrival of a user query, that is, the start of processing, until the model generates its first output token. TTFT is a critical performance metric because it directly impacts the interactive user experience in applications such as chatbots and document analysis.

Inter-Token Latency (ITL)

This metric represents the average delay between the generation of two consecutive output tokens. ITL is a key performance metric primarily concerning the decoding phase of LLM inference.
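Both TTFT and ITL can be derived from the same raw data: the time a request arrived and the time each output token was produced. The sketch below shows that arithmetic. The function names and the timestamp format (seconds as floats) are assumptions for illustration; the dashboard computes these values for you, and its exact aggregation may differ.

```python
from typing import Sequence


def time_to_first_token(request_arrival: float, token_times: Sequence[float]) -> float:
    """Seconds between request arrival and the first output token."""
    if not token_times:
        raise ValueError("no output tokens were generated")
    return token_times[0] - request_arrival


def mean_inter_token_latency(token_times: Sequence[float]) -> float:
    """Average gap, in seconds, between consecutive output tokens.

    Returns 0.0 when fewer than two tokens exist, since no gap is defined.
    """
    if len(token_times) < 2:
        return 0.0
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    return sum(gaps) / len(gaps)


if __name__ == "__main__":
    arrival = 0.0
    # Hypothetical timestamps: prefill takes 0.5 s, then tokens stream out.
    tokens = [0.5, 0.6, 0.7, 0.9]
    print(time_to_first_token(arrival, tokens))
    print(mean_inter_token_latency(tokens))
```

In this example the first gap (prefill) dominates TTFT, while ITL reflects only the steady decoding gaps that follow.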

Cache Hit Rate

Often called the prefix cache hit ratio, this measures the efficiency of the caching system concerning the retrieval of precomputed Key-Value (KV) caches. A cache hit occurs when a segment of an LLM input (e.g., a reused context or prefix) is already stored in the KV cache backend.
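One way to picture the ratio is at the token level: for each request, count how many leading prompt tokens match a prefix that is already cached, then divide by the total number of prompt tokens. The sketch below is a toy model under that assumption. The function names and the plain list of cached prefixes are hypothetical, and production KV cache backends typically match in fixed-size blocks rather than token by token.

```python
from typing import Sequence


def prefix_hit_tokens(prompt: Sequence[int], cached_prefixes: Sequence[Sequence[int]]) -> int:
    """Length of the longest leading run of the prompt found in any cached prefix."""
    best = 0
    for cached in cached_prefixes:
        matched = 0
        for prompt_token, cached_token in zip(prompt, cached):
            if prompt_token != cached_token:
                break
            matched += 1
        best = max(best, matched)
    return best


def cache_hit_rate(prompts: Sequence[Sequence[int]],
                   cached_prefixes: Sequence[Sequence[int]]) -> float:
    """Fraction of all prompt tokens whose KV entries could be reused."""
    total_tokens = sum(len(p) for p in prompts)
    if total_tokens == 0:
        return 0.0
    hit_tokens = sum(prefix_hit_tokens(p, cached_prefixes) for p in prompts)
    return hit_tokens / total_tokens


if __name__ == "__main__":
    cached = [[1, 2, 3, 4]]                  # e.g. a shared system prompt
    prompts = [[1, 2, 3, 4, 5, 6], [1, 2, 9, 9], [7, 8]]
    print(cache_hit_rate(prompts, cached))
```

A higher ratio means more of the prefill work is skipped, which generally lowers TTFT for requests that share context.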

Input Throughput

This refers to the rate at which the system can process incoming requests, focusing specifically on the efficiency of handling the input context during the prefill phase. It is frequently measured as the query processing rate or Queries Per Second (QPS). Achieving high input throughput is critical because the computation associated with processing the input is the primary bottleneck for scaling LLM services.

Output Throughput

This refers to the rate at which the system generates tokens for the user. It is usually measured in tokens per second or indirectly reflected in the system's overall QPS. This metric is intrinsically tied to the efficiency of the decoding phase of the LLM inference process.
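To make the two throughput figures concrete, the sketch below computes QPS, input tokens per second, and output tokens per second from a list of requests completed within a measurement window. The `RequestRecord` type and the `throughput` function are illustrative assumptions, not a Tensormesh API.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class RequestRecord:
    """Token counts for one completed request (hypothetical record shape)."""
    input_tokens: int
    output_tokens: int


def throughput(records: Sequence[RequestRecord], window_seconds: float) -> Dict[str, float]:
    """Aggregate rates over a measurement window of the given length."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return {
        "qps": len(records) / window_seconds,
        "input_tokens_per_s": sum(r.input_tokens for r in records) / window_seconds,
        "output_tokens_per_s": sum(r.output_tokens for r in records) / window_seconds,
    }


if __name__ == "__main__":
    # Two requests completed in a 10-second window.
    window = [RequestRecord(1000, 200), RequestRecord(500, 100)]
    print(throughput(window, window_seconds=10.0))
```

Tracking the input and output rates separately helps show whether a slowdown comes from the prefill phase or from decoding.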

Understanding these metrics gives you granular control over resource optimization. For instance, input throughput reflects how efficiently the prefill phase handles incoming context, while output throughput reflects the token generation rate of the decoding phase, so a drop in one or the other tells you which phase to investigate.

If your LLM deployment is like a high-performance race car, the new performance metrics dashboard is your sophisticated digital dashboard, showing you exactly how fast you accelerate (TTFT), how smoothly you handle the curves (ITL), and how efficiently you are utilizing your fuel (Cache Hit Rate and Throughput), ensuring you can maximize performance and scale effectively.

To try these new features, visit your Tensormesh dashboard!

Are there features that you would like us to add to our product?

Feel free to reach out to us via: