Enterprise AI applications increasingly rely on processing large documents: legal contracts, research papers, financial reports, medical records, and technical specifications. With LLM context windows now reaching 1M+ tokens, these systems promise the ability to feed entire documents into your AI and extract insights at scale.

But there's a problem that most organizations discover too late: document-heavy AI workloads drain your GPU budget faster than almost any other use case.

The Document Reprocessing Problem

Long-context LLMs were supposed to make document analysis more efficient by eliminating the need for chunking and complex retrieval systems. Instead, they've created a new class of infrastructure challenges that are quietly driving up enterprise AI costs.

Why Document AI Systems Waste GPU Resources

Every document query follows the same expensive pattern:

1. Document Upload – Your system loads a 50-100 page document into the LLM

2. Initial Processing – The model reads through 50,000-100,000 tokens to build understanding

3. Query Processing – User asks a question about specific content

4. Generation – The model produces an answer

When the next user asks a different question about the same document, steps 2-4 repeat entirely from scratch.

Let's break down what this means in practice:

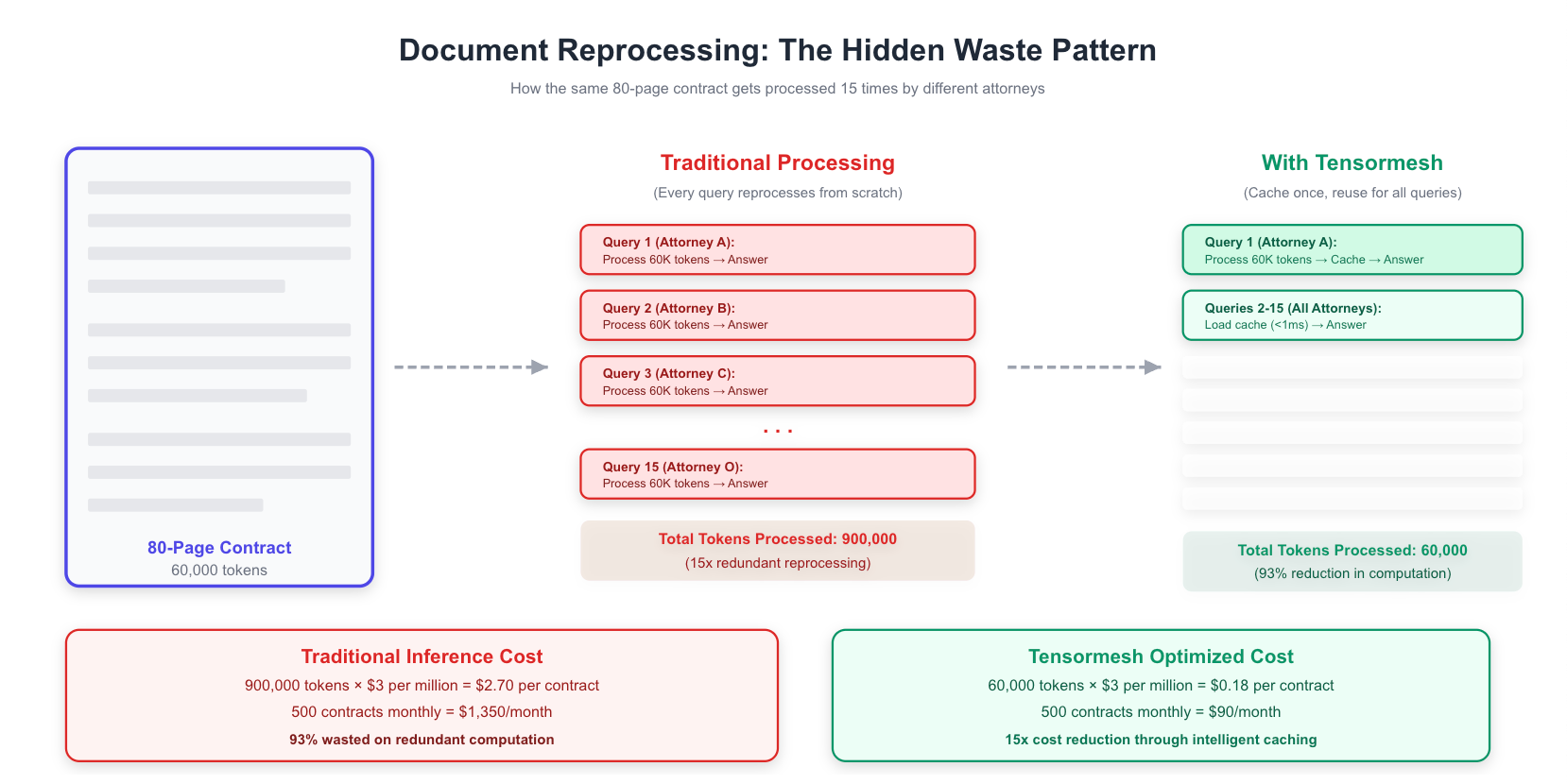

Scenario: Legal Contract Analysis System (Example based on typical deployment patterns)

- Your firm processes 500 contracts monthly

- Average contract length: 80 pages (~60,000 tokens)

- Each contract receives 10-15 queries from different attorneys

- Peak usage: 50 concurrent document sessions

The cost breakdown:

- First query on Contract A: Process 60,000 tokens

- Second query on Contract A (different attorney): Process 60,000 tokens again

- Third query on Contract A: Process 60,000 tokens again

- 15 total queries: 900,000 tokens processed for the same document

Across 500 contracts monthly, that adds up to 450 million document tokens processed, 420 million of them redundantly, when processing each document just once would take only 30 million.
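The token counts above can be checked directly. This sketch uses the upper bound of 15 queries per contract from the scenario and, as a simplifying assumption, ignores the comparatively small number of tokens in each question.

```python
# Illustrative arithmetic for the legal-contract scenario above.
# Question tokens are ignored (an assumption); only document prefill is counted.
CONTRACTS_PER_MONTH = 500
TOKENS_PER_CONTRACT = 60_000
QUERIES_PER_CONTRACT = 15  # upper end of the 10-15 range

def monthly_document_tokens(contracts: int, tokens_per_doc: int, queries_per_doc: int):
    """Return (tokens processed without reuse, tokens needed with reuse, redundant tokens)."""
    without_reuse = contracts * tokens_per_doc * queries_per_doc  # every query re-prefills the document
    with_reuse = contracts * tokens_per_doc                       # each document prefilled exactly once
    return without_reuse, with_reuse, without_reuse - with_reuse

total, needed, redundant = monthly_document_tokens(
    CONTRACTS_PER_MONTH, TOKENS_PER_CONTRACT, QUERIES_PER_CONTRACT)
print(f"processed without reuse: {total:,}")
print(f"needed with reuse:       {needed:,}")
print(f"redundant:               {redundant:,} ({redundant / total:.1%})")
```

With 15 queries per document, 14 of every 15 document prefills are repeats, which is where the roughly 93% redundancy comes from.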

At typical GPU inference costs, you're burning through $15,000-$35,000 monthly just on redundant document reprocessing.

Four Document Processing Bottlenecks Driving Up Your Costs

1. Prefill Latency

Processing a 60,000-token document takes 8-15 seconds before the LLM can even start answering your question, and every user querying that document waits through this prefill phase. For a document that gets 15 queries, that adds up to 2-4 minutes of cumulative wait time, and 14 of those 15 prefills repeat work that has already been done.
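The 2-4 minute figure follows from multiplying the per-prefill time by the number of queries. This minimal sketch uses the 8-15 s range quoted above. It assumes, for simplicity, that loading a cached document costs nothing, so the "with reuse" number is a lower bound.

```python
def cumulative_prefill_seconds(queries: int, seconds_per_prefill: float,
                               reuse_cache: bool = False) -> float:
    """Total time spent in document prefill across a document's queries.

    Without reuse, every query pays the full prefill. With reuse, only the first does.
    Cache-load time for later queries is ignored here (an assumption).
    """
    prefills = 1 if reuse_cache else queries
    return prefills * seconds_per_prefill

for per_prefill in (8, 15):
    naive = cumulative_prefill_seconds(15, per_prefill)
    cached = cumulative_prefill_seconds(15, per_prefill, reuse_cache=True)
    print(f"{per_prefill:>2} s/prefill: {naive / 60:.1f} min without reuse, {cached} s with reuse")
```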

2. Identical Document, Complete Recomputation

Three analysts reviewing the same quarterly earnings report. Five researchers examining the same medical study. Ten engineers referencing the same technical specification. Traditional inference engines treat each interaction as brand new, reprocessing the entire document from scratch every single time as though they had never seen it.

3. Peak-Hour Memory Pressure

During business hours, dozens of documents are being analyzed simultaneously. Each document's KV cache (the model's computational "memory" of what it has processed) consumes precious GPU VRAM. When memory fills up, which happens in seconds under production load, caches get evicted.

An analyst returns 10 minutes later with a follow-up question about the same document? The cache is gone, and the model reprocesses everything from page 1.
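To see why VRAM fills so quickly, you can estimate a document's KV cache size. The standard estimate stores one key and one value vector per layer, per KV head, per token. The model shape below is an assumption: a Llama-3-70B-style configuration with 80 layers, 8 KV heads (grouped-query attention), a head dimension of 128, and an FP16 cache.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelShape:
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int  # 2 for an FP16/BF16 cache

def kv_cache_bytes(shape: ModelShape, num_tokens: int) -> int:
    """Bytes of KV cache for num_tokens tokens (factor 2 = one key + one value tensor)."""
    per_token = 2 * shape.num_layers * shape.num_kv_heads * shape.head_dim * shape.bytes_per_elem
    return per_token * num_tokens

# Assumed Llama-3-70B-style shape; real deployments vary.
shape = ModelShape(num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_elem=2)
size = kv_cache_bytes(shape, 60_000)
print(f"{size / 2**30:.1f} GiB for one 60,000-token contract")
```

Under these assumptions, one 60,000-token contract needs about 19.7 GB of KV cache. At the 50 concurrent sessions in the earlier scenario, that comes to roughly 980 GB, far more than a single 80 GB GPU can hold. Models with fewer KV heads or a quantized KV cache need less, but the scaling is still linear in document length.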

4. Multi-User Document Access Patterns

Documents don't get analyzed once and forgotten. Legal contracts get reviewed by multiple partners. Research papers get cited across different projects. Financial reports get queried by various departments. Yet each user's interaction triggers full document reprocessing because the previous user's KV cache was evicted minutes ago.
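The following toy model shows why the previous user's cache is gone by the time the next user arrives. `LRUKVCache` is hypothetical and tracks whole documents by token count. Real engines such as vLLM manage KV memory in fixed-size blocks instead. The 200,000-token capacity is an assumption chosen to fit three contracts.

```python
from collections import OrderedDict

class LRUKVCache:
    """Toy GPU KV cache sized in tokens; evicts least-recently-used documents first."""

    def __init__(self, capacity_tokens: int):
        self.capacity = capacity_tokens
        self.used = 0
        self.entries = OrderedDict()  # doc_id -> cached token count, oldest first

    def query(self, doc_id: str, doc_tokens: int) -> bool:
        """Return True on a cache hit. On a miss, 'prefill' the document and cache it,
        evicting least-recently-used documents until it fits."""
        if doc_id in self.entries:
            self.entries.move_to_end(doc_id)  # mark as most recently used
            return True
        if doc_tokens > self.capacity:
            return False  # too large to cache at all: prefilled on every query
        while self.used + doc_tokens > self.capacity:
            _, evicted_tokens = self.entries.popitem(last=False)  # drop the LRU document
            self.used -= evicted_tokens
        self.entries[doc_id] = doc_tokens
        self.used += doc_tokens
        return False

# Assumed capacity: room for three 60,000-token contracts.
cache = LRUKVCache(capacity_tokens=200_000)
first = cache.query("contract-A", 60_000)        # miss: full prefill
for other in ("contract-B", "contract-C", "contract-D"):
    cache.query(other, 60_000)                    # other sessions in the meantime
follow_up = cache.query("contract-A", 60_000)     # miss again: A was evicted
print(first, follow_up)
```

Only three other documents pass through the cache between the two visits, yet the follow-up query still pays the full prefill.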

The Real Cost of Document AI at Scale

Here's what enterprise document processing systems actually cost:

The illustration above shows how traditional inference engines reprocess the same document completely for every query, while Tensormesh caches the document once and reuses it across all subsequent queries. In a 15-query session, that means prefilling the document once instead of 15 times: about 93% less document computation and roughly 15x lower document-processing cost.

Why Traditional Solutions Fall Short

You might think existing optimizations solve this:

Document Chunking? Defeats the purpose of long-context models and breaks semantic understanding across sections.

Summarization First? Loses critical details and nuance, exactly what you need LLMs to preserve in legal, medical, and technical documents.

Smaller Context Windows? Limits the size and complexity of documents you can analyze, creating artificial constraints on your use cases.

Horizontal Scaling? Throwing more GPUs at the problem just multiplies your costs without addressing the core inefficiency of redundant computation.

Common Document AI Workloads Bleeding Money

Think about your organization's document processing patterns. How many times do you reprocess the same content?

High-repetition scenarios:

Legal & Compliance

- Contract review systems where multiple attorneys query the same agreements

- Regulatory document analysis with recurring reference checks

- Due diligence document processing across deal teams

Research & Academia

- Literature review systems citing the same papers repeatedly

- Grant proposal analysis with shared reference documents

- Clinical trial documentation accessed by multiple researchers

Financial Services

- Earnings reports analyzed by different departments

- Regulatory filings referenced across compliance reviews

- Investment memoranda shared among analysts

Enterprise Knowledge Management

- Technical documentation accessed by engineering teams

- Policy handbooks queried by HR and legal

- Product specifications referenced across product and sales

Healthcare & Life Sciences

- Medical records reviewed by multiple specialists

- Clinical guidelines referenced during patient care

- Research protocols accessed across study teams

If multiple users query the same documents repeatedly, you could be paying 5-10x more for GPUs than you need to because document caches aren't being reused.

How Tensormesh Transforms Document Processing Economics

Tensormesh was built specifically to solve the computational waste that burdens document-heavy AI workloads. Here's how we fundamentally change the game:

Intelligent Document KV Cache Persistence

Traditional inference engines lose their "memory" (KV cache) of a processed document as soon as it is evicted from GPU VRAM. Tensormesh leverages LMCache, an open-source library created by our founders, to persist these document caches in CPU RAM, local SSDs, or shared storage.
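Here is the tiering idea in miniature: entries pushed out of a fast tier are demoted to a slower one instead of being discarded. This is a hypothetical sketch, not LMCache's API or implementation. `TieredKVStore`, the tier names, and the per-tier capacities (counted in documents) are all assumptions, and string placeholders stand in for real KV tensors.

```python
from collections import OrderedDict

class TieredKVStore:
    """Hypothetical multi-tier KV cache (e.g. GPU -> CPU RAM -> SSD).

    When a tier overflows, its least-recently-used entry is demoted to the next
    tier rather than dropped. Hits are not promoted back up, for brevity.
    """

    def __init__(self, tiers):
        # tiers: list of (name, max_entries), fastest tier first
        self.tiers = [(name, cap, OrderedDict()) for name, cap in tiers]

    def put(self, key, value, level=0):
        if level >= len(self.tiers):
            return  # fell off the slowest tier: discarded
        _, cap, store = self.tiers[level]
        store[key] = value
        store.move_to_end(key)
        if len(store) > cap:
            old_key, old_value = store.popitem(last=False)  # least recently used
            self.put(old_key, old_value, level + 1)          # demote instead of drop

    def get(self, key):
        """Return (tier_name, value) from the fastest tier holding key, or None."""
        for name, _, store in self.tiers:
            if key in store:
                store.move_to_end(key)
                return name, store[key]
        return None

store = TieredKVStore([("gpu", 3), ("cpu", 10), ("ssd", 100)])  # assumed capacities
for doc in ("contract-A", "contract-B", "contract-C", "contract-D"):
    store.put(doc, f"<kv for {doc}>")
print(store.get("contract-A"))  # demoted to the CPU tier, not lost
```

Compared with the single-tier LRU case, the evicted contract is still available. Loading it from CPU RAM or SSD is slower than a GPU hit but far cheaper than recomputing 60,000 tokens of prefill.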

What this means for your document AI: When your model processes an 80-page contract the first time, that computation is preserved. The next 14 attorneys querying that same contract don't trigger reprocessing; they instantly reuse the cached document understanding and only process their own questions.
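"Only process their own questions" relies on prefix matching: the document tokens come first in the prompt, so their cached KV data can be reused and only the tail needs prefill. Below is a generic sketch of chunk-level prefix caching, not Tensormesh or LMCache code. The 256-token chunk size, the chained SHA-256 scheme, and the integer token IDs are assumptions made for illustration.

```python
import hashlib

CHUNK_SIZE = 256  # tokens per cached chunk (assumed value)

def chunk_keys(token_ids, chunk_size=CHUNK_SIZE):
    """Chained chunk hashes: each key covers its chunk and everything before it,
    so a key match implies the entire prefix matches. A partial final chunk is skipped."""
    keys, prev = [], b""
    for start in range(0, len(token_ids) - chunk_size + 1, chunk_size):
        chunk = token_ids[start:start + chunk_size]
        digest = hashlib.sha256(prev + ",".join(map(str, chunk)).encode())
        prev = digest.digest()
        keys.append(digest.hexdigest())
    return keys

def tokens_to_prefill(token_ids, cached_keys, chunk_size=CHUNK_SIZE):
    """Tokens that still need prefill after reusing the longest cached prefix."""
    reused = 0
    for key in chunk_keys(token_ids, chunk_size):
        if key not in cached_keys:
            break
        reused += chunk_size
    return len(token_ids) - reused

document = list(range(60_000))                # stand-in token IDs for the contract
question_1 = list(range(100_000, 100_040))    # first attorney's question (40 tokens)
question_2 = list(range(200_000, 200_030))    # second attorney's question (30 tokens)

cache = set(chunk_keys(document + question_1))  # populated by the first request
print(tokens_to_prefill(document + question_2, cache))  # only the tail is recomputed
```

The second request recomputes just 126 tokens: the 96 document tokens in the final partial chunk plus its 30 question tokens. This only works if the document sits before the question in the prompt. Putting the question first would change every chunk hash.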

Automatic Document Recognition

Tensormesh identifies when queries reference the same documents, whether it's the same PDF uploaded multiple times, identical content in your document store, or recurring references to standard templates. This happens automatically, with no changes to your existing document processing pipeline.
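The source does not describe how Tensormesh recognizes repeated documents. One common way to spot duplicate uploads is a content fingerprint, sketched below purely as an illustration. The normalization rules and the `document_fingerprint` function are hypothetical.

```python
import hashlib
import unicodedata

def document_fingerprint(text: str) -> str:
    """Hypothetical content fingerprint: normalize Unicode and whitespace, then hash,
    so re-uploads of the same text map to the same cache key."""
    normalized = unicodedata.normalize("NFC", text)
    normalized = " ".join(normalized.split())  # collapse whitespace and line-break differences
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

upload_1 = "MASTER SERVICES AGREEMENT\n\nThis Agreement is entered into..."
upload_2 = "MASTER SERVICES AGREEMENT \r\n\r\nThis Agreement is entered into..."
print(document_fingerprint(upload_1) == document_fingerprint(upload_2))
```

Normalizing before hashing is only safe if the prompt is also built from the normalized text. KV reuse requires identical token sequences, so two uploads that hash alike but are tokenized from different raw text would still produce different caches.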

Sub-Second Query Response

Instead of spending 8-15 seconds reprocessing a document before answering each query, Tensormesh retrieves and applies the cached document KV data in under a second. The first query on a document still takes the normal prefill time, but every subsequent query on that document is dramatically faster.
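A rough time-to-first-token model shows where the difference comes from. The 4,000 tokens/s prefill rate is derived from the slow end of the 8-15 s figure above. The 100-token question and the 0.5 s cache-load time are assumptions, and prefill time is treated as linear in token count, which is a simplification.

```python
def time_to_first_token(doc_tokens: int, question_tokens: int,
                        prefill_tokens_per_s: float,
                        cache_hit: bool = False, cache_load_s: float = 0.0) -> float:
    """Rough TTFT in seconds: prefill time for uncached tokens, plus cache-load time on a hit.
    Ignores queueing and decode, and treats prefill as linear in token count."""
    if cache_hit:
        return question_tokens / prefill_tokens_per_s + cache_load_s
    return (doc_tokens + question_tokens) / prefill_tokens_per_s

RATE = 60_000 / 15  # 4,000 tokens/s, derived from the slow end of the quoted range
cold = time_to_first_token(60_000, 100, RATE)                                    # first query
warm = time_to_first_token(60_000, 100, RATE, cache_hit=True, cache_load_s=0.5)  # repeat query
print(f"cold: {cold:.2f} s, warm: {warm:.2f} s")
```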

5-10x Cost Reduction for Document Workloads

By eliminating 70%+ of redundant document computation, Tensormesh customers typically see:

- GPU costs drop by 5-10x compared to traditional long-context inference

- Latency reduced by 80-90% for repeat queries on the same documents

- Throughput improvements of 3-5x on the same infrastructure
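Here is how a computation saving maps to a cost multiple. If a fraction f of the compute is eliminated and cost scales with compute (an assumption that ignores fixed overheads and decode time), the remaining cost is (1 − f) of the original. That is a 1 / (1 − f) reduction.

```python
def cost_reduction_factor(fraction_eliminated: float) -> float:
    """Cost multiple implied by eliminating a fraction f of compute: 1 / (1 - f).
    Assumes cost is proportional to compute."""
    if not 0 <= fraction_eliminated < 1:
        raise ValueError("fraction_eliminated must be in [0, 1)")
    return 1 / (1 - fraction_eliminated)

for f in (0.70, 0.80, 0.90, 14 / 15):
    print(f"{f:.1%} of compute eliminated -> {cost_reduction_factor(f):.1f}x lower cost")
```

By this arithmetic, a 5-10x reduction corresponds to eliminating roughly 80-90% of compute. The 15x in the 15-query illustration corresponds to skipping 14 of 15 document prefills.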

Get Started in Minutes

Tensormesh integrates seamlessly with your existing document processing infrastructure:

- Try Tensormesh – Visit tensormesh.ai and access our beta with $100 in GPU credits

- Integrate Effortlessly – Works with vLLM, LMCache, and your current framework; setup takes minutes

- Watch the Transformation – Immediate visibility into cache hit rates, cost savings, and performance gains

Tensormesh is the advanced AI inference platform that turns computational waste into performance gains through intelligent caching and routing. Built by the creators of LMCache, we're helping AI teams scale smarter, not harder.

Sources and References

This article draws on research and industry analysis from the following sources:

- huggingface.co/blog/tngtech/llm-performance-prefill-decode-concurrent-requests - "Prefill and Decode for Concurrent Requests - Optimizing LLM Performance"

- medium.com/@devsp0703/inside-real-time-llm-inference-from-prefill-to-decode-explained-72a1c9b1d85a - "Inside Real-Time LLM Inference: From Prefill to Decode, Explained"

- aussieai.com/research/prefill - "Prefill Optimization"

- developer.nvidia.com/blog/mastering-llm-techniques-inference-optimization - "Mastering LLM Techniques: Inference Optimization"

- platform.claude.com/docs/en/about-claude/pricing - "Pricing - Claude Docs"

- metacto.com/blogs/anthropic-api-pricing-a-full-breakdown-of-costs-and-integration - "Anthropic Claude API Pricing 2026: Complete Cost Breakdown"

- community.netapp.com/t5/Tech-ONTAP-Blogs/KV-Cache-Offloading-When-is-it-Beneficial/ba-p/462900 - "KV Cache Offloading - When is it Beneficial?"

- github.com/vllm-project/vllm/issues/7697 - "Enable Memory Tiering for vLLM"

All technical claims about Tensormesh's capabilities are based on Tensormesh product documentation and represent typical customer outcomes.