For years, the AI landscape has been dominated by closed-source models from tech giants like OpenAI, Google, and Anthropic. These proprietary systems promised cutting-edge performance but came with high costs, vendor lock-in, and limited transparency. That narrative is rapidly changing. In 2025, open-source and open-weight AI models have emerged not as budget alternatives, but as legitimate and often superior choices for enterprises, developers, and researchers alike.

The numbers tell a compelling story:

- Near-parity performance: Open-source models achieve roughly 90% of the performance of their closed-source counterparts on average

- Cost advantage: Operational costs about 87% lower than closed alternatives on average

- Market opportunity: The AI industry could save approximately $25 billion annually by optimally reallocating demand from closed to open models (MIT/Linux Foundation research)

- Adoption gap: Despite these advantages, open models account for only 20% of current usage, largely due to misconceptions about performance, reliability, and enterprise readiness

This blog examines why open-source AI models have achieved parity with closed systems, where they excel, and how Tensormesh's intelligent caching and routing infrastructure amplifies their advantages, making open-source AI not just viable, but the strategic choice for the next wave of AI innovation.

Performance Parity: Open Source Models Match Industry Leaders

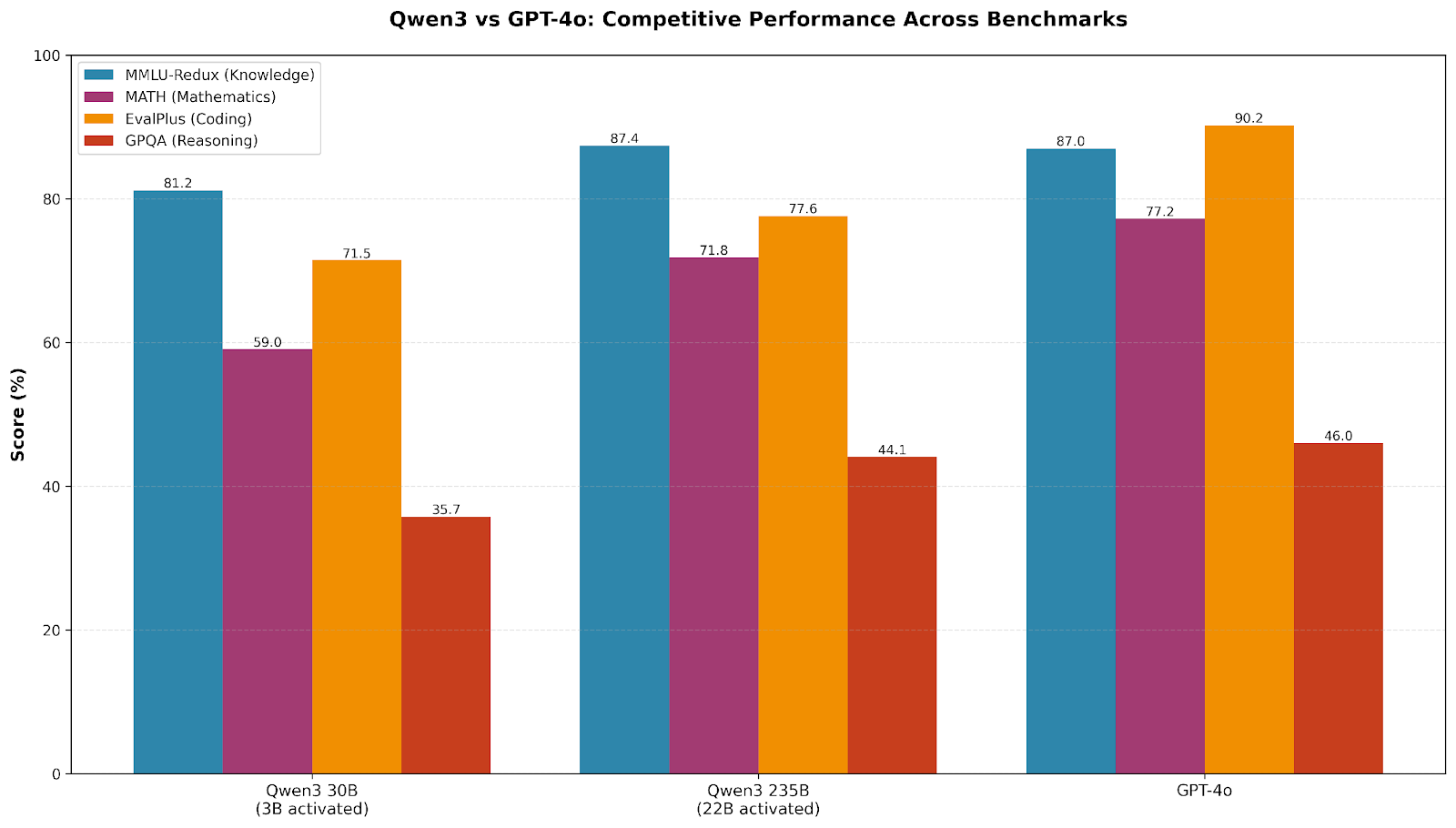

Alibaba's Qwen3 family has emerged as one of the most impressive open-source alternatives to closed models. Available through Tensormesh in multiple configurations, including the flagship 235B-parameter model and specialized Qwen3 Coder variants, these models deliver competitive performance across knowledge, reasoning, and coding tasks.

What makes Qwen3 particularly compelling:

- Massive adoption: Qwen models have become the most downloaded open-source models globally, with active community development and transparent practices

- Specialized variants: Qwen3 Coder models (30B and 480B variants available through Tensormesh) excel at software engineering tasks, competing directly with closed alternatives

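- Efficient architecture: The flagship Qwen3 235B model uses a mixture-of-experts (MoE) design that activates only about 22B parameters per token, so it delivers strong performance without the per-token compute cost of a dense model of the same size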

- Full control: Deploy on your infrastructure, customize for your domain, and eliminate dependency on external APIs
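Much of that efficiency comes from the mixture-of-experts design: the flagship Qwen3 235B model activates 22B of its parameters per token (as listed in the model lineup later in this post). A back-of-the-envelope sketch, assuming the common rule of thumb that a transformer forward pass costs about 2 FLOPs per active parameter per token, compares it with a hypothetical dense model of the same size:

```python
def forward_flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs per token: ~2 FLOPs per active parameter (rule of thumb)."""
    return 2.0 * active_params


TOTAL_PARAMS = 235e9   # Qwen3 235B: total parameters
ACTIVE_PARAMS = 22e9   # parameters activated for each token

dense = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense model of the same size
moe = forward_flops_per_token(ACTIVE_PARAMS)   # MoE model: only active experts compute

print(f"active fraction: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")
print(f"dense: {dense:.2e} FLOPs/token, MoE: {moe:.2e} FLOPs/token")
print(f"compute ratio: {dense / moe:.1f}x")  # 10.7x
```

MoE reduces compute per token, not memory: all 235B parameters still have to be loaded onto the serving GPUs. The rule of thumb also ignores attention costs, which grow with context length.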

For enterprises building production AI applications, Qwen3 offers a compelling value proposition: competitive performance, transparent development, and operational flexibility that closed models simply cannot match.

Figure 1: Qwen3 achieves competitive performance against GPT-4o across key benchmarks

The Specialized Models Advantage

Beyond general-purpose models, the open-source ecosystem has produced specialized alternatives that excel in specific domains. DeepSeek-Coder V2, a mixture-of-experts model with 236 billion total parameters (about 21 billion active per token), competes directly with Claude and GPT models on code generation. Meanwhile, Mistral AI's Mixtral models use a mixture-of-experts architecture to deliver performance rivaling much larger dense models while running only a fraction of their parameters for each token.
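The mixture-of-experts (MoE) idea mentioned above works by letting a small router send each token to only a few expert sub-networks; Mixtral 8x7B, for example, routes each token to 2 of its 8 experts. A toy sketch of that top-k gating in plain Python, assuming hand-set router weights and trivial "experts" that just scale their input (real MoE layers learn both and run inside every transformer block). `top_k_moe` and `make_expert` are hypothetical names:

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Sequence[float]], Vector]


def top_k_moe(
    x: Sequence[float],
    gate: List[List[float]],
    experts: List[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Toy top-k mixture-of-experts layer: only k of the experts run on input x.

    Assumes every expert returns a vector the same length as x.
    """
    # Router score per expert: dot product of x with that expert's gate vector
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate]
    # Keep the k highest-scoring experts
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen scores only (subtract the max for numerical stability)
    top = max(logits[i] for i in chosen)
    raw = [math.exp(logits[i] - top) for i in chosen]
    total = sum(raw)
    weights = [r / total for r in raw]
    # Weighted sum of the chosen experts' outputs; the other experts never execute
    out = [0.0] * len(x)
    for i, w in zip(chosen, weights):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


calls: List[int] = []  # records which experts actually ran


def make_expert(i: int) -> Expert:
    def expert(v: Sequence[float]) -> Vector:
        calls.append(i)
        return [(i + 1) * vi for vi in v]  # toy expert: scale the input by i + 1
    return expert


experts = [make_expert(i) for i in range(8)]  # 8 experts, 2 active per token
gate = [[float(i), 0.0] for i in range(8)]    # toy router: expert i scores i * x[0]
out, chosen = top_k_moe([1.0, 0.5], gate, experts, k=2)
print(chosen, sorted(calls))  # [7, 6] [6, 7]
```

Training an MoE model typically adds a load-balancing term so tokens spread across experts; this sketch covers inference-time routing only.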

Cost Efficiency: The $25 Billion Opportunity

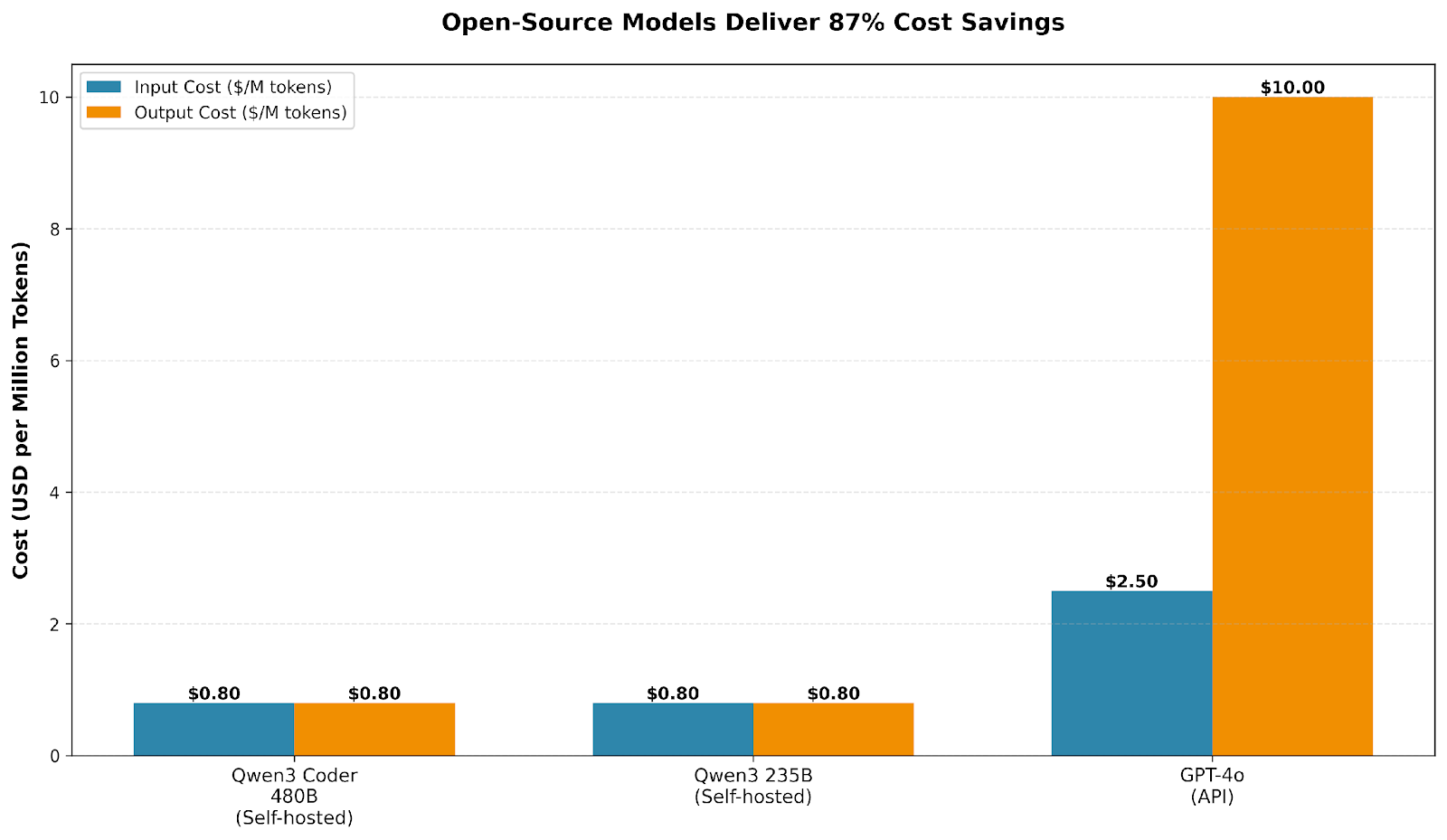

The economic advantages of open-source models are transformative. OpenAI lists GPT-4o API pricing at $2.50 per million input tokens and $10.00 per million output tokens. Self-hosted open models replace per-token charges with infrastructure costs (GPUs, hosting, and operations), while hosted open-model APIs typically charge a fraction of closed-model prices.

Recent MIT research by Frank Nagle and Daniel Yue found that the global AI economy could save approximately $25 billion annually by optimally substituting open models for closed ones. Their analysis of OpenRouter usage showed:

- Open models achieve ~90% of closed model performance

- Open models cost 87% less than closed alternatives

- Yet closed models account for 80% of usage

This represents a massive market inefficiency, driven in part by deployment complexity: exactly the problem Tensormesh solves.
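The study's two headline averages (about 90% of closed-model performance at about 87% lower cost) can be combined into a single performance-per-dollar comparison. A minimal sketch, assuming both figures apply to the same workload and that benchmark scores translate linearly into value (both simplifications); `perf_per_dollar_ratio` is a hypothetical helper:

```python
def perf_per_dollar_ratio(relative_performance: float, cost_reduction: float) -> float:
    """Open-model performance per dollar relative to a closed model.

    relative_performance: open-model score as a fraction of the closed model's (0.90 = 90%)
    cost_reduction: fractional price cut of the open model (0.87 = 87% cheaper)
    """
    relative_cost = 1.0 - cost_reduction  # e.g. 0.13 when the open model is 87% cheaper
    return relative_performance / relative_cost


print(f"{perf_per_dollar_ratio(0.90, 0.87):.1f}x performance per dollar")  # 6.9x
```

A workload where the open model scores lower, or where hosting overhead erodes the price gap, will see a smaller ratio.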

Figure 2: Open-source models deliver 87% cost savings compared to closed alternatives

Breaking Down the Economics

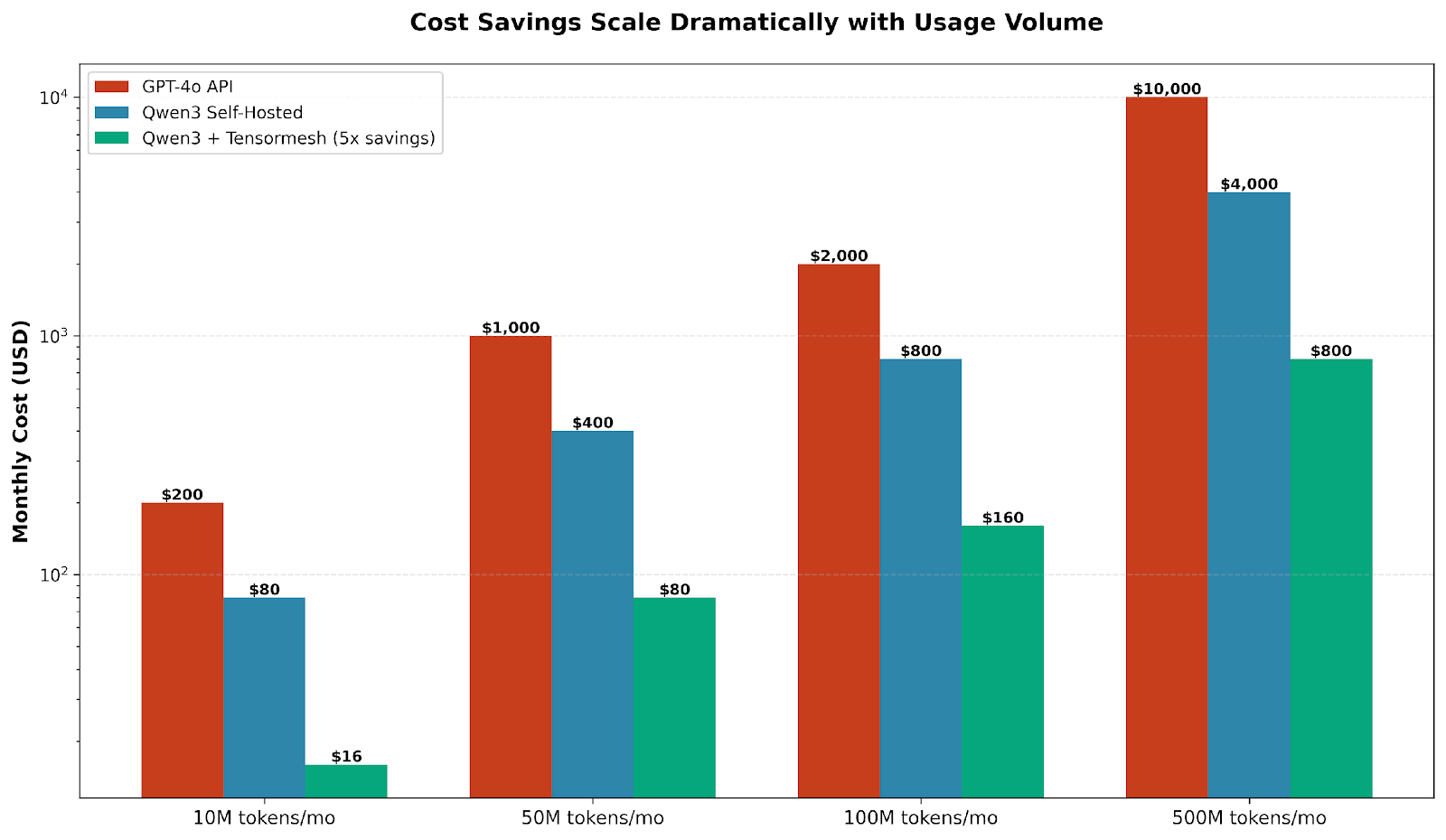

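For enterprises processing 100+ million tokens monthly, common for customer service chatbots, code assistants, or document processing, the savings scale linearly with volume. At the GPT-4o list prices above and the 87% average cost reduction:

- 100M tokens/month: GPT-4o costs $250-1,000 depending on the input/output mix, so switching saves roughly $220-870 monthly (about $2,600-10,400 annually)

- 500M tokens/month: GPT-4o costs $1,250-5,000, so switching saves roughly $1,090-4,350 monthly (about $13,000-52,000 annually)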
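Because API charges are linear in tokens, the savings depend only on volume and on the mix of input and output tokens. A minimal sketch that recomputes them from the GPT-4o list prices and the 87% average cost reduction cited above; the input shares are illustrative assumptions, and the model ignores migration, hosting, and operations costs:

```python
GPT4O_INPUT_PER_M = 2.50    # USD per million input tokens (GPT-4o list price quoted above)
GPT4O_OUTPUT_PER_M = 10.00  # USD per million output tokens
OPEN_MODEL_SAVING = 0.87    # average cost reduction reported by Nagle and Yue


def monthly_api_cost(total_tokens_m: float, input_share: float) -> float:
    """Monthly GPT-4o API bill in USD for total_tokens_m million tokens.

    input_share is the fraction of tokens that are input (prompt) tokens; the rest are output.
    """
    input_m = total_tokens_m * input_share
    output_m = total_tokens_m - input_m
    return input_m * GPT4O_INPUT_PER_M + output_m * GPT4O_OUTPUT_PER_M


def monthly_saving(total_tokens_m: float, input_share: float) -> float:
    """Estimated monthly saving from an open model that is OPEN_MODEL_SAVING cheaper."""
    return monthly_api_cost(total_tokens_m, input_share) * OPEN_MODEL_SAVING


for volume_m in (100, 500):
    for share in (1.0, 0.75, 0.0):  # all input, 3:1 input:output, all output
        saving = monthly_saving(volume_m, share)
        print(
            f"{volume_m}M tokens/month, {share:.0%} input: "
            f"GPT-4o ${monthly_api_cost(volume_m, share):,.0f}, "
            f"saving ${saving:,.0f}/month (${12 * saving:,.0f}/year)"
        )
```

Since output tokens cost four times as much as input tokens, output-heavy traffic produces up to four times the bill, and therefore the saving, of input-heavy traffic at the same volume.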

Figure 3: Tensormesh amplifies open-source savings with 5-10x GPU cost reduction

Strategic Advantages Beyond Cost

Open-source models offer benefits that extend far beyond economics:

- Data sovereignty: Deploy on-premises or in private clouds for complete data control, critical for regulated industries

- Customization: Full access to weights and architecture enables domain-specific optimization and fine-tuning

- No vendor lock-in: Eliminate vulnerability to pricing changes, policy modifications, or service degradation

- Transparency: Inspect the weights and architecture and audit model behavior directly; fully open releases go further and also publish their training data

For organizations building critical AI infrastructure, these advantages can be as important as the cost savings.

How Tensormesh Amplifies Open Source Advantages

While open-source models deliver impressive performance and cost advantages, deploying them at scale introduces challenges: managing distributed inference, optimizing latency, and controlling GPU costs. This is where Tensormesh's intelligent caching and routing infrastructure becomes transformative.

5-10x GPU Cost Reduction Through Intelligent Caching

Tensormesh's caching eliminates redundant GPU inference by serving repeated or similar queries from cache instead of recomputing them, reducing GPU costs by 5-10x on workloads with substantial repetition while maintaining response quality.
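Caching only saves GPU time when requests repeat. A minimal sketch of the idea, assuming exact matches after simple case and whitespace normalization: an LRU response cache that calls the model only on a miss. `ResponseCache` and `fake_model` are hypothetical names, and this is not Tensormesh's implementation; matching genuinely similar (not identical) queries would need something like embedding similarity, and many LLM serving systems instead reuse the attention key-value (KV) cache for shared prompt prefixes.

```python
import hashlib
from collections import OrderedDict
from typing import Callable, List


class ResponseCache:
    """Toy LRU response cache in front of an expensive model call (illustration only)."""

    def __init__(self, generate: Callable[[str], str], capacity: int = 1024) -> None:
        self.generate = generate      # the expensive, GPU-backed call
        self.capacity = capacity
        self.entries = OrderedDict()  # prompt key -> cached response, oldest first
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def __call__(self, prompt: str) -> str:
        k = self.key(prompt)
        if k in self.entries:
            self.hits += 1
            self.entries.move_to_end(k)  # mark as most recently used
            return self.entries[k]
        self.misses += 1
        response = self.generate(prompt)  # only misses reach the model
        self.entries[k] = response
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return response


calls: List[str] = []


def fake_model(prompt: str) -> str:
    calls.append(prompt)  # stands in for a GPU inference call
    return f"answer to: {prompt}"


cache = ResponseCache(fake_model, capacity=2)
cache("What is Qwen3?")
cache("what is   QWEN3?")  # same key after normalization: served from cache
print(cache.hits, cache.misses, len(calls))  # 1 1 1
```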

Real-World Example (100M tokens/month):

- Self-hosted without caching: $6,000-8,000

- With Tensormesh: $1,200-1,600

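- GPT-4o API at the list prices above: $250-1,000, depending on the input/output mix

The combination of open-source economics and intelligent caching creates compound savings: caching cuts the GPU bill of a self-hosted deployment, and because that bill is tied to provisioned GPUs rather than to each token served, its cost per token falls as volume grows, while API charges stay constant per token.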
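One way to read the 5-10x figure, assuming GPU cost scales with the work that is not served from cache and that cache lookups are free (both simplifications): if a fraction h of the work hits the cache, cost falls by a factor of 1/(1 - h). A 5x reduction then corresponds to an 80% hit rate and 10x to 90%, and the $1,200-1,600 range above is the 5x end applied to $6,000-8,000. The helper names below are hypothetical:

```python
def reduction_factor(hit_rate: float) -> float:
    """Cost reduction factor when a fraction hit_rate of GPU work is served from cache."""
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in [0, 1)")
    return 1.0 / (1.0 - hit_rate)


def cached_cost(base_cost: float, hit_rate: float) -> float:
    """Remaining GPU cost when only cache misses consume GPU time."""
    return base_cost * (1.0 - hit_rate)


for h in (0.8, 0.9):
    low, high = cached_cost(6000, h), cached_cost(8000, h)
    print(f"hit rate {h:.0%}: {reduction_factor(h):.0f}x cheaper, ${low:,.0f}-{high:,.0f}/month")
```

The factor climbs steeply near the top: moving from a 90% to a 95% hit rate doubles it again, to 20x, which is why workloads with heavy reuse (long shared system prompts, multi-turn chats, repeatedly queried documents) benefit most.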

Sub-Second Latency for Production Workloads

Tensormesh delivers sub-second routing and cache retrieval with optimized inference for Qwen3 models. The result: open-source deployments that match or exceed closed model latency while maintaining cost advantages.

For real-time applications like conversational AI, code completion, and interactive assistants, where latency is critical, enterprises get both superior economics and production-grade performance.
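Routing matters because a cache helps only if a request lands on the server that holds the cached data. A minimal sketch of prefix-aware routing, one common technique in LLM serving, assuming each replica tracks the token sequences it has cached: send each request to the replica with the longest reusable prefix, minus a penalty for its current load. `Replica`, `route`, and `load_penalty` are hypothetical, and this does not describe Tensormesh's router.

```python
from dataclasses import dataclass, field
from typing import List


def shared_prefix_len(a: List[int], b: List[int]) -> int:
    """Number of leading tokens two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


@dataclass
class Replica:
    name: str
    load: int = 0  # requests currently in flight
    cached: List[List[int]] = field(default_factory=list)  # token sequences in its cache

    def reusable_tokens(self, tokens: List[int]) -> int:
        return max((shared_prefix_len(seq, tokens) for seq in self.cached), default=0)


def route(replicas: List[Replica], tokens: List[int], load_penalty: int = 4) -> Replica:
    """Pick the replica with the most reusable cached prefix, discounted by its load."""
    best = max(replicas, key=lambda r: r.reusable_tokens(tokens) - load_penalty * r.load)
    best.load += 1  # decrementing load on completion is omitted for brevity
    best.cached.append(list(tokens))  # after serving, this prompt is cached there too
    return best


system_prompt = [1, 2, 3, 4, 5, 6, 7, 8]  # token IDs of a shared system prompt
a = Replica("gpu-a", cached=[system_prompt])
b = Replica("gpu-b")
print(route([a, b], system_prompt + [42]).name)  # gpu-a: reuses 8 cached tokens
print(route([a, b], [9, 10]).name)               # gpu-b: nothing to reuse, gpu-a is busier
```

Real routers bound cache memory, evict old entries, and index prefixes with hashed blocks or tries rather than scanning every cached sequence.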

Access to 300,000+ Models Through Hugging Face Integration

Tensormesh integrates directly with Hugging Face's entire model library, giving you access to the world's largest open-source AI ecosystem:

Featured Models We Optimize For:

- Qwen3 235B (22B activated) - Flagship reasoning model

- Qwen3 Coder 30B & 480B - Specialized coding models

- OpenAI GPT models - Familiar closed alternatives

Plus Access to Thousands More:

- Llama, Mistral, DeepSeek models

- Specialized domain models (legal, medical, financial)

- Multilingual models for global applications

- Emerging cutting-edge research models

The trajectory is clear: open-source AI will reshape the industry through community innovation, transparency, and superior economics. The competitive advantage goes to organizations that adopt early. For teams ready to reduce costs, eliminate vendor dependency, and future-proof their AI stack, the window is open.

Sources and References

1. Qwen Team. (2025). "Qwen3 Technical Report." arXiv:2505.09388. https://arxiv.org/abs/2505.09388

2. Qwen Team. (2025). "Qwen3: Think Deeper, Act Faster." Qwen Blog. https://qwenlm.github.io/blog/qwen3/

3. Nagle, F. & Yue, D. (2026). "AI open models have benefits. So why aren't they more widely used?" MIT Sloan. https://mitsloan.mit.edu/ideas-made-to-matter/ai-open-models-have-benefits-so-why-arent-they-more-widely-used

4. Nagle, F. & Yue, D. (2025). "The Latent Role of Open Models in the AI Economy." SSRN. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5767103

5. OpenAI. (2024). "GPT-4o System Card." https://openai.com/index/gpt-4o-system-card/

6. IT Pro. (2025). "Open source AI models are cheaper than closed source competitors and perform on par." https://www.itpro.com/software/open-source/open-source-ai-performance-cost-savings-proprietary-models-linux-foundation