AI companies hit a brutal wall when trying to scale. Processing requests efficiently while sustaining high throughput and low latency is hard, and the difficulty shows up most clearly in resource efficiency and GPU utilization. The data reveals the severity:

- The majority of organizations achieve less than 70% GPU allocation utilization when running at peak demand

- Most AI accelerators, including top chips from NVIDIA and AMD, run at under 50% of capacity during AI inference

- Deployment complexity is the top challenge, with 49.2% of respondents citing it as a major hurdle, while GPU availability and pricing affect 44.5% of respondents

This throughput bottleneck doesn't just slow systems down; it actively prevents companies from scaling their AI applications to meet user demand. Today's systems won't scale on either front: requirements or cost.

Why Current Scaling Solutions Fail at Production Scale

The Memory Bandwidth Wall

Organizations throw more hardware at the problem, but fundamental architectural limitations remain. Large-batch inference remains memory-bound: DRAM bandwidth saturation is the primary bottleneck, leaving most GPU compute capacity underutilized. Even with massive GPU investments, LLM inference at smaller batch sizes hits the same wall, because model parameters can't move from memory to the compute units fast enough.

The implications are severe:

- Each server needs hundreds of GB of memory to serve models, and memory speed directly limits how many users you can support.

- Syncing data between GPUs can eat 30% of your total latency.

- Bigger batches eventually stop helping, especially with smaller models.
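The effect of memory bandwidth on user capacity can be approximated with a back-of-envelope model. The sketch below is a simplification, not a benchmark: it assumes each decode step reads the model weights once plus one KV cache per sequence, and that memory traffic is the only limit. The function name and all numbers are illustrative assumptions chosen to show the shape of the curve.

```python
def decode_tokens_per_second(weight_bytes, kv_bytes_per_seq, batch_size,
                             bandwidth_bytes_per_s):
    """Upper bound on decode throughput when memory traffic is the only limit.

    Each decode step reads the model weights once (shared by the whole batch)
    plus the KV cache of every sequence in the batch, then emits one token
    per sequence.
    """
    bytes_per_step = weight_bytes + batch_size * kv_bytes_per_seq
    step_seconds = bytes_per_step / bandwidth_bytes_per_s
    return batch_size / step_seconds


# Illustrative numbers only (assumptions, not measurements).
WEIGHT_BYTES = 14e9        # e.g. a 7B-parameter model at 2 bytes per parameter
KV_BYTES_PER_SEQ = 0.5e9   # hypothetical KV cache size for one long sequence
BANDWIDTH = 2e12           # hypothetical 2 TB/s of memory bandwidth

if __name__ == "__main__":
    for batch in (1, 8, 64, 512):
        tps = decode_tokens_per_second(
            WEIGHT_BYTES, KV_BYTES_PER_SEQ, batch, BANDWIDTH
        )
        print(f"batch={batch:4d}  ~{tps:,.0f} tokens/s")
    # As the batch grows, throughput approaches BANDWIDTH / KV_BYTES_PER_SEQ.
    print(f"ceiling ~{BANDWIDTH / KV_BYTES_PER_SEQ:,.0f} tokens/s")
```

Under these assumptions, small batches are dominated by the cost of reading the weights, while large batches are dominated by KV cache reads, so throughput flattens toward a ceiling set by bandwidth. The model ignores compute limits, memory capacity, and inter-GPU synchronization.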

The GPU Utilization Disaster

The economics are even worse than the technical challenges. When asked about peak periods for GPU usage, 15% of respondents report that less than 50% of their available and purchased GPUs are being utilized. Traditional solutions like batch processing, model quantization, and edge deployment treat symptoms rather than addressing the core issue: most AI workloads contain massive redundancy that existing architectures repeatedly recalculate.
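To make the redundancy concrete, here is a toy sketch of prefix reuse, assuming requests share a common prompt prefix such as a system prompt. `PrefixCache` and `tokens_to_compute` are hypothetical names for illustration; this is not Tensormesh's implementation or any serving library's API, and it tracks only which token prefixes have been seen, not the cached tensors themselves.

```python
from typing import Dict, List


class PrefixCache:
    """Toy trie that remembers which token prefixes were already processed."""

    def __init__(self) -> None:
        self._root: Dict[int, dict] = {}

    def tokens_to_compute(self, tokens: List[int]) -> int:
        """Return how many tokens of this prompt are not covered by a cached
        prefix, then record the full prompt so later requests can reuse it."""
        node = self._root
        hit = 0
        # Walk the trie as far as the prompt matches a cached prefix.
        for tok in tokens:
            if tok not in node:
                break
            node = node[tok]
            hit += 1
        # Insert the uncached remainder of the prompt.
        for tok in tokens[hit:]:
            node = node.setdefault(tok, {})
        return len(tokens) - hit


if __name__ == "__main__":
    system_prompt = list(range(100))        # hypothetical shared 100-token prefix
    cache = PrefixCache()
    for suffix in ([1000, 1001], [2000], [1000, 1001]):
        prompt = system_prompt + suffix
        print(len(prompt), "tokens in prompt,",
              cache.tokens_to_compute(prompt), "to compute")
```

In this toy run, only the first request pays for the shared prefix; later requests compute just the tokens that differ. A real system would still run at least the final token through the model and would need eviction and memory limits, which are omitted here.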

Continuous batching minimizes GPU idle time by processing tokens from multiple requests concurrently, grouping tokens from different sequences into batches to improve GPU utilization and inference throughput. However, this approach can increase individual user latency, creating a painful trade-off between throughput and responsiveness that organizations shouldn't have to make.
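The idle-time reduction from continuous batching can be illustrated with a small simulation. This is a simplified sketch under stated assumptions: every request needs a known number of decode steps (at least one), each step advances every active request by one token, and the function names are hypothetical. It models only slot occupancy, not per-request latency.

```python
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], slots: int) -> int:
    """Fixed batches: each batch runs until its longest request finishes,
    so slots freed by short requests sit idle."""
    steps = 0
    for i in range(0, len(lengths), slots):
        steps += max(lengths[i:i + slots])
    return steps


def continuous_batching_steps(lengths: List[int], slots: int) -> int:
    """Continuous batching: a waiting request is admitted as soon as any
    slot frees up, instead of waiting for the whole batch to finish."""
    queue = deque(lengths)
    active: List[int] = []   # remaining decode steps per active request
    steps = 0
    while queue or active:
        # Fill free slots from the queue before each decode step.
        while queue and len(active) < slots:
            active.append(queue.popleft())
        steps += 1
        # Advance every active request by one token; drop finished ones.
        active = [remaining - 1 for remaining in active if remaining > 1]
    return steps


if __name__ == "__main__":
    output_lengths = [2, 8, 2, 8, 2, 2]   # hypothetical tokens per request
    print("static:    ", static_batching_steps(output_lengths, slots=2))
    print("continuous:", continuous_batching_steps(output_lengths, slots=2))
```

With mixed short and long requests, the continuous scheduler finishes the same work in fewer decode steps because slots never wait on the longest request in a fixed batch.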

The Breakthrough: Intelligent Caching at Scale

The Real-World Impact: Throughput That Actually Scales

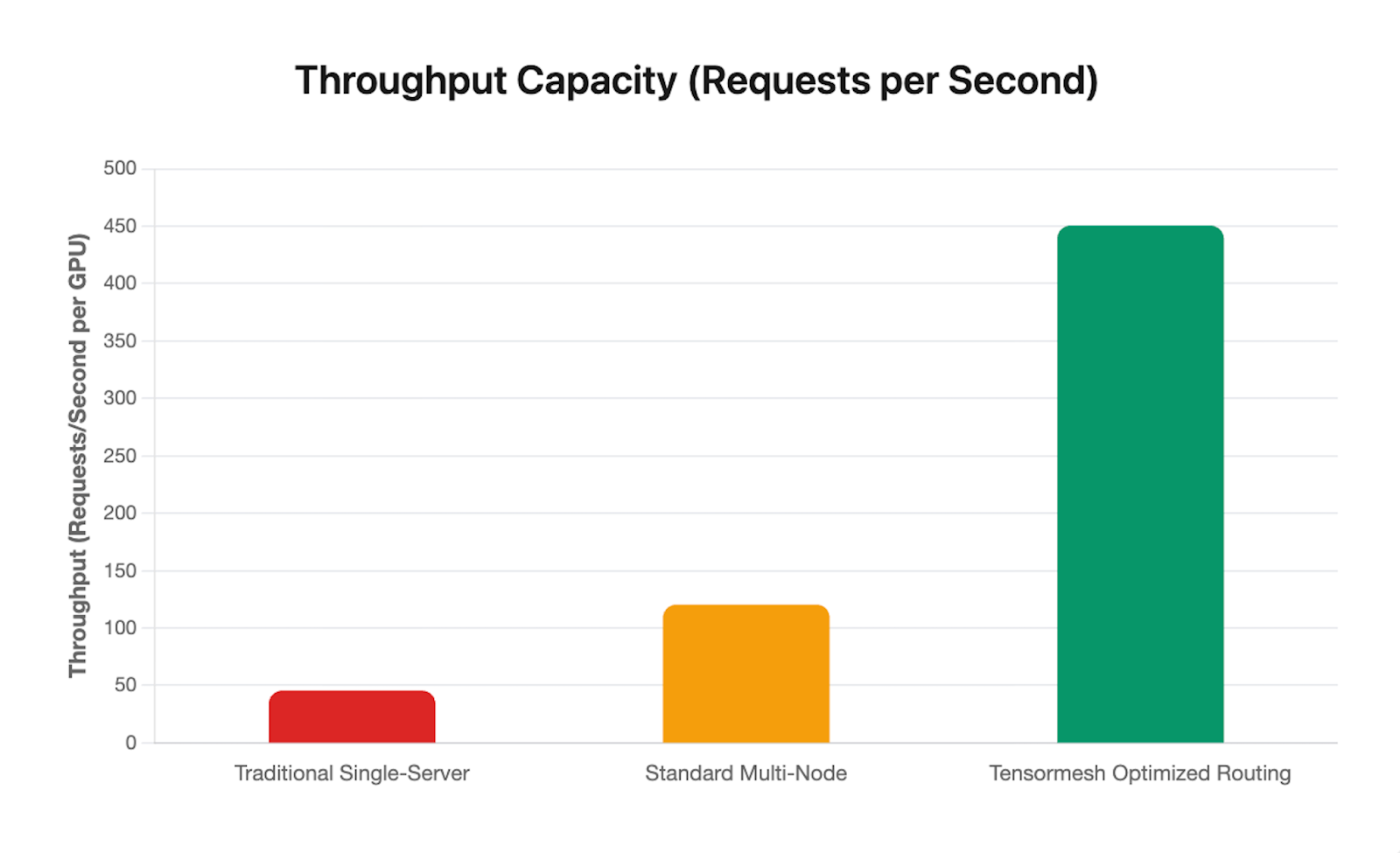

The difference between traditional architectures and Tensormesh’s becomes dramatic under real production loads. Single-server deployments hit throughput ceilings around 40 requests per second per GPU. Standard multi-node setups improve this to roughly 115 requests per second, but still waste resources on redundant computations.

Figure 1: Throughput Capacity Comparison Across Infrastructure Types

Tensormesh's optimized routing with distributed cache sharing delivers 450+ requests per second per GPU, nearly 4× the throughput of standard multi-node deployments and more than 11× that of traditional single-server approaches. This isn't just an incremental improvement; it's the difference between infrastructure that scales and infrastructure that collapses.
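The ratios follow directly from the per-GPU figures quoted in this section, and the same arithmetic gives a rough GPU count for a given load. The sketch below assumes all three figures are per GPU and that throughput scales linearly with GPU count; the 10,000 requests-per-second target is a hypothetical example, not a figure from this article.

```python
import math

# Per-GPU throughput figures quoted in this section (requests per second).
PER_GPU_RPS = {
    "single-server": 40,
    "standard multi-node": 115,
    "Tensormesh": 450,
}


def gpus_needed(target_rps: float, per_gpu_rps: float) -> int:
    """GPUs required to serve target_rps, assuming linear scaling."""
    return math.ceil(target_rps / per_gpu_rps)


if __name__ == "__main__":
    best = PER_GPU_RPS["Tensormesh"]
    for name, rps in PER_GPU_RPS.items():
        print(f"{name:20s} {rps:4d} rps/GPU  "
              f"speedup vs this setup: {best / rps:5.2f}x  "
              f"GPUs for 10,000 rps: {gpus_needed(10_000, rps)}")
```

Linear scaling is an optimistic assumption; real deployments lose some throughput to routing and synchronization overhead, so treat the GPU counts as lower bounds.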

Get Started with Tensormesh in 3 Simple Steps with $100 in GPU Credit

Step 1: Evaluate your existing inference costs, GPU utilization, and throughput limitations. Identify where redundant computations are costing you performance and budget.

Step 2: Deploy Tensormesh. Visit www.tensormesh.ai to access our platform. Integration requires minimal configuration, and new users can receive $100 in GPU credits to test Tensormesh.

Step 3: Scale with Confidence. Use Tensormesh's observability tools to track throughput improvements, cost reductions, and cache efficiency. As your inference demands grow, Tensormesh automatically optimizes resource allocation for consistent performance at any scale.

The companies solving throughput now will serve more users with improved response times, deploy more sophisticated models, and build sustainable competitive advantages, all while spending less on infrastructure.

Ready to break through the throughput ceiling? Visit www.tensormesh.ai to claim your $100 in free GPU credits and start scaling your AI infrastructure.

Sources

- Engineering at Meta - Scaling LLM Inference: Innovations in Tensor Parallelism, Context Parallelism, and Expert Parallelism https://engineering.fb.com/2025/10/17/ai-research/scaling-llm-inference-innovations-tensor-parallelism-context-parallelism-expert-parallelism/

- BentoML - 2024 AI Inference Infrastructure Survey Highlights https://www.bentoml.com/blog/2024-ai-infra-survey-highlights

- ClearML - The State of AI Infrastructure at Scale 2024 (PDF Report) https://ai-infrastructure.org/wp-content/uploads/2024/03/The-State-of-AI-Infrastructure-at-Scale-2024.pdf

- ClearML Blog - Download the 2024 State of AI Infrastructure Research Report https://clear.ml/blog/the-state-of-ai-infrastructure-at-scale-2024

- arXiv - Mind the Memory Gap: Unveiling GPU Bottlenecks in Large-Batch LLM Inference https://arxiv.org/html/2503.08311v2

- Databricks Blog - LLM Inference Performance Engineering: Best Practices https://www.databricks.com/blog/llm-inference-performance-engineering-best-practices

- NVIDIA Research (via SemiEngineering) - Efficient LLM Inference: Bandwidth, Compute, Synchronization, and Capacity are all you need https://semiengineering.com/llm-inference-core-bottlenecks-imposed-by-memory-compute-capacity-synchronization-overheads-nvidia/

- Modal Blog - 'I paid for the whole GPU, I am going to use the whole GPU': A high-level guide to GPU utilization https://modal.com/blog/gpu-utilization-guide

- Hyperbolic - How to Check GPU Usage: A Simple Guide for Optimizing AI Workloads https://www.hyperbolic.ai/blog/how-to-check-gpu-usage-a-simple-guide-for-optimizing-ai-workloads

- NeuReality - The 50% AI Inference Problem: How to Maximize Your GPU Utilization https://www.neureality.ai/blog/the-hidden-cost-of-ai-why-your-expensive-accelerators-sit-idle

- arXiv - Efficient LLM Inference: Bandwidth, Compute, Synchronization, and Capacity are all you need https://arxiv.org/html/2507.14397v1

- InfoQ - Scaling Large Language Model Serving Infrastructure at Meta https://www.infoq.com/presentations/llm-meta/