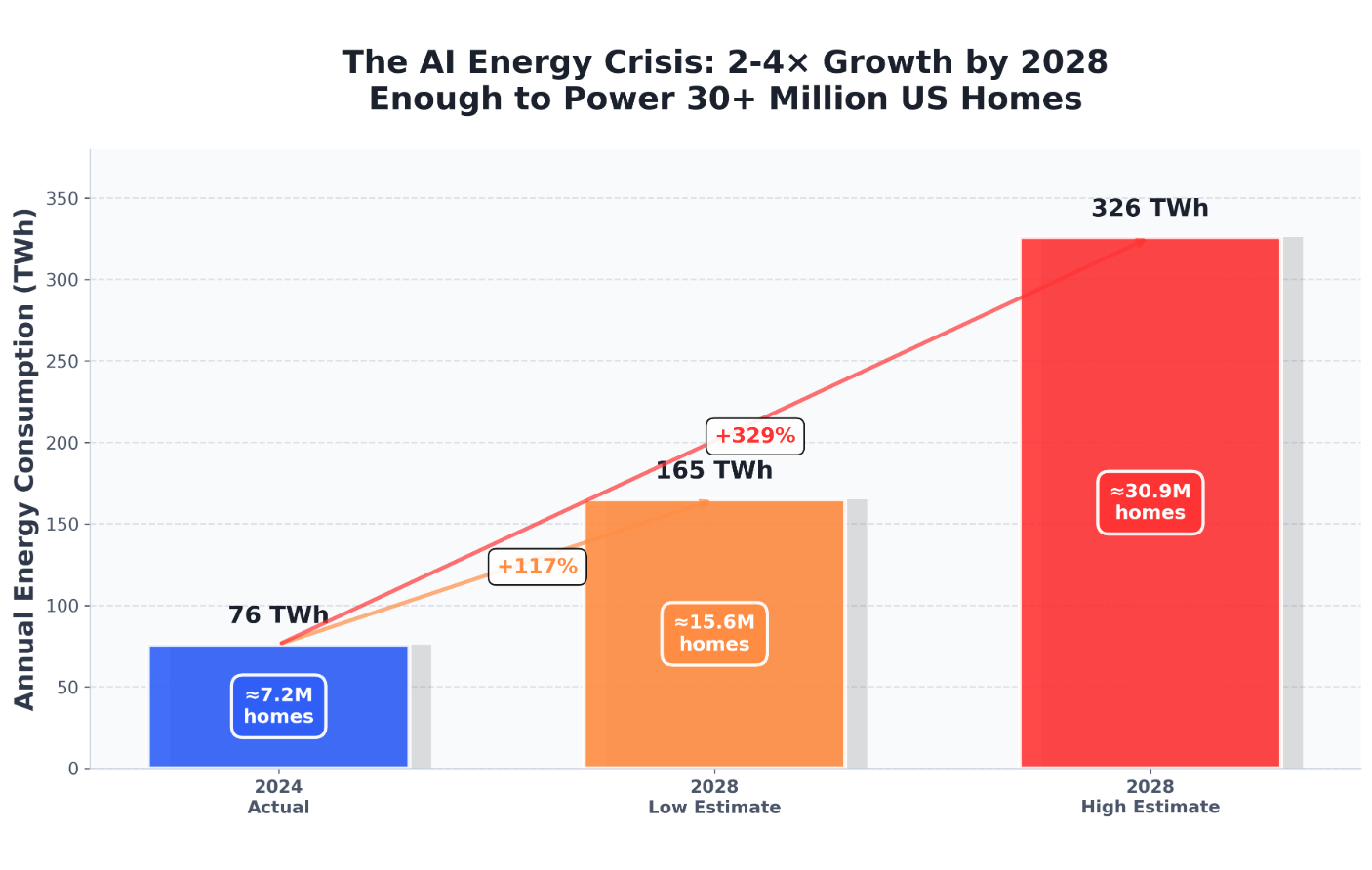

The AI inference market is exploding from $106 billion in 2025 to $255 billion by 2030, but this growth comes with costs that threaten to derail the entire industry. Energy demands are growing faster than infrastructure can support. The data reveals the severity:

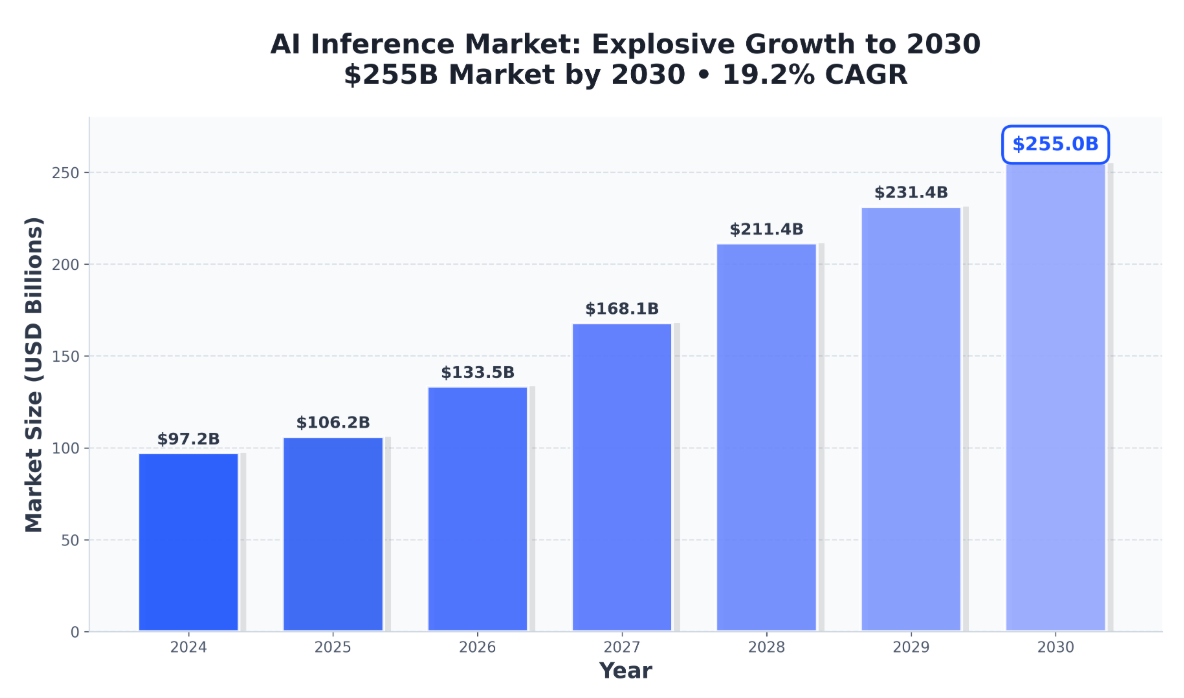

- AI inference will consume 165-326 terawatt-hours annually by 2028, enough to power 22% of all US households

- Data center carbon intensity is 48% higher than the US average, with emissions concentrated in fossil fuel-heavy regions

- Tech giants are committing over $1 trillion in infrastructure spending (OpenAI's $500B Stargate, Apple's $500B, Google's $75B in 2025 alone)

This energy crisis doesn't just impact sustainability goals; it actively prevents companies from scaling AI applications profitably. Today's infrastructure economics won't work at production scale.

Figure 1: AI Inference Market Projected Growth - $97B to $255B by 2030

The Energy Economics Are Broken

According to a comprehensive analysis by MIT Technology Review, US data centers consumed 200 terawatt-hours in 2024, roughly enough to power Thailand for a year. AI-specific operations used 53-76 terawatt-hours of this total. Industry now produces 90% of notable AI models, and inference consumes 80-90% of all AI computing power. This is a complete reversal from the period when training dominated resource allocation.

The projections are staggering. By 2028, AI inference alone could consume 165-326 terawatt-hours annually. Lawrence Berkeley National Laboratory's December 2024 report was blunt: "Data center growth is occurring with little consideration for how best to integrate these emergent loads" into electrical grids.

The implications are severe:

- Variable energy costs per query: Text generation ranges from 0.03 watt-hours (simple queries) to 1.9 watt-hours (complex reasoning). Image generation requires 0.6-1.2 watt-hours. Video generation consumes nearly 1 kilowatt-hour per 5-second clip, over 800× more than high-quality images.

- Hidden carbon costs: The same AI workload produces nearly 2× the carbon emissions in West Virginia as it does in California because of grid composition. Virginia, America's largest data center hub, relies heavily on natural gas.

- Infrastructure race: Meta and Microsoft are building nuclear plants. OpenAI's Stargate needs 5 gigawatts per data center, more than New Hampshire's entire power consumption.
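To make the per-query figures above concrete, here is a minimal back-of-the-envelope sketch in Python. The energy values come from the ranges quoted in the list (using the upper end for images and rounding video up to 1 kWh); the traffic mix and the function name `daily_energy_kwh` are hypothetical and chosen only for illustration.

```python
# Per-request energy figures quoted above, in watt-hours (Wh).
ENERGY_WH = {
    "text_simple": 0.03,    # simple text query
    "text_complex": 1.9,    # complex reasoning query
    "image": 1.2,           # upper end of the 0.6-1.2 Wh range
    "video_5s": 1000.0,     # "nearly 1 kilowatt-hour", rounded up
}


def daily_energy_kwh(requests_per_day: dict[str, int]) -> float:
    """Total daily energy in kWh for a mix of request types."""
    total_wh = sum(ENERGY_WH[kind] * count for kind, count in requests_per_day.items())
    return total_wh / 1000.0


# Hypothetical traffic mix (assumption, not a figure from the article).
workload = {
    "text_simple": 1_000_000,
    "text_complex": 100_000,
    "image": 50_000,
    "video_5s": 1_000,
}

print(f"{daily_energy_kwh(workload):,.0f} kWh/day")
```

In this made-up mix, the 1,000 video clips account for most of the total even though they are the rarest request type, which is why the variance in per-query cost matters for capacity planning.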

Harvard's Electricity Law Initiative found that utility deals with tech giants often raise residential electricity rates. A Virginia study estimated ratepayers could pay an additional $37.50 monthly to subsidize data center energy costs.

Figure 2: AI Inference Energy Consumption - Projected 2-4× Growth by 2028

The Real-World Applications Driving Demand

Stanford's 2025 AI Index Report documents AI's rapid integration across industries:

- Healthcare: FDA approved 223 AI-enabled medical devices in 2023, up from 6 in 2015. Medical imaging and diagnostics require real-time processing of massive datasets.

- Autonomous Vehicles: Waymo provides 150,000+ rides weekly. Each requires continuous inference operations.

- Finance: Real-time fraud detection and algorithmic trading where milliseconds equal millions in value.

- Industrial Software: AI adoption expected to double the global industrial software market by 2030, powering everything from predictive maintenance to construction digital twins.

"For any company to make money out of a model that only happens on inference," notes Microsoft researcher Esha Choukse. Every ChatGPT query, recommendation system, and autonomous decision represents an inference operation companies must support at scale.

The Breakthrough: Intelligent Caching Changes Economics

The solution isn't building bigger data centers or waiting for nuclear power. It's making existing infrastructure work smarter through intelligent caching at the inference layer.

Why Caching Transforms AI Inference Economics

Traditional AI inference treats every query as unique, constantly hitting expensive GPU resources. This approach ignores a critical insight: many queries are semantically similar or produce overlapping intermediate computations that could be reused.
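The general idea can be sketched in a few lines of Python. This is an illustrative toy, not Tensormesh's implementation: `InferenceCache`, `embed`, and the 0.8 similarity threshold are all hypothetical, and the bag-of-words `embed` function stands in for a real embedding model. It covers only exact and similar-query reuse, not reuse of intermediate computations.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: bag-of-words token counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InferenceCache:
    """Checks for an exact match, then a similar past query, before calling the model."""

    def __init__(self, model, threshold: float = 0.8):
        self.model = model          # callable: prompt -> response (the expensive GPU call)
        self.threshold = threshold  # minimum similarity to reuse a cached response
        self.exact = {}             # prompt text -> response
        self.entries = []           # list of (embedding, response)
        self.hits = 0
        self.misses = 0

    def query(self, text: str) -> str:
        if text in self.exact:                      # 1. exact match
            self.hits += 1
            return self.exact[text]
        vec = embed(text)
        best = max(self.entries, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            self.hits += 1                          # 2. semantically similar match
            return best[1]
        self.misses += 1                            # 3. fall through to the model
        response = self.model(text)
        self.exact[text] = response
        self.entries.append((vec, response))
        return response


calls = []


def fake_model(prompt: str) -> str:
    """Hypothetical model stub that records each time it is actually invoked."""
    calls.append(prompt)
    return f"answer to: {prompt}"


cache = InferenceCache(fake_model)
for prompt in [
    "what is the refund policy",
    "what is the refund policy",          # exact repeat
    "what is the refund policy please",   # near-duplicate wording
    "how do I reset my password",         # unrelated
]:
    cache.query(prompt)

print(f"hits={cache.hits} misses={cache.misses} model_calls={len(calls)}")
```

The threshold is the main design trade-off in a sketch like this: set it too low and users receive answers to questions they did not ask; set it too high and the cache rarely hits.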

Tensormesh's intelligent caching layer recognizes patterns across queries, whether exact matches or semantically similar requests, and serves results from cache rather than recomputing from scratch. The impact is immediate:

5-10× GPU Cost Reduction: Companies using Tensormesh see GPU costs drop by 5-10×. For enterprises spending millions on inference, this translates to immediate savings while simultaneously cutting energy consumption proportionally.

Sub-Second Latency: Speed matters in AI inference. Customer service chatbots, financial trading algorithms, and autonomous systems require real-time responses. Tensormesh's optimized routing directs requests to optimal endpoints, achieving sub-second latency that enables entirely new classes of applications.

Deploy in Minutes: Tensormesh integrates seamlessly with your existing AI infrastructure. Deploy new models in minutes, not weeks. No complex setup, no DevOps headaches, just connect your models and start optimizing. Teams focus on building products instead of managing infrastructure complexity.

Real-Time Performance Monitoring: Tensormesh's dashboard provides complete visibility into inference operations, query sources, latency profiles, cache hit rates, and cost metrics. This transparency enables informed decisions about resource allocation and sustainability.

The Math: Energy Savings at Scale

If AI inference will consume 165-326 terawatt-hours annually by 2028, and intelligent caching reduces GPU usage 5-10×, the potential energy savings measure in tens of terawatt-hours, equivalent to millions of homes' annual electricity consumption.
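The arithmetic behind this estimate can be sketched as follows. Two assumptions are doing the work here and neither comes from a cited source: energy use is taken to fall in proportion to GPU usage, and the `ADOPTION` share of inference workloads that actually gets the reduction is a hypothetical 25%. The function names are illustrative.

```python
def fraction_saved(reduction_factor: float) -> float:
    """Share of the original usage avoided by an N-times reduction: 1 - 1/N."""
    return 1.0 - 1.0 / reduction_factor


def energy_saved_twh(baseline_twh: float, reduction_factor: float, adoption: float) -> float:
    """TWh avoided if a fraction `adoption` (0-1) of the baseline gets the reduction."""
    return baseline_twh * adoption * fraction_saved(reduction_factor)


ADOPTION = 0.25  # hypothetical share of inference workloads using caching (assumption)

for baseline in (165, 326):      # projected 2028 range, in TWh per year
    for factor in (5, 10):       # 5-10x GPU usage reduction
        saved = energy_saved_twh(baseline, factor, ADOPTION)
        print(f"baseline {baseline} TWh, {factor}x reduction: ~{saved:.0f} TWh saved")
```

A 5× reduction avoids 80% of the original usage and a 10× reduction avoids 90%. Under the 25% adoption assumption, the savings land between roughly 33 and 73 terawatt-hours per year; at full adoption the same formula would give figures in the hundreds.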

For businesses, the economics are straightforward:

- Reduce infrastructure costs 80-90% while maintaining or improving performance

- Deploy faster with proven integrations to leading AI platforms

- Scale confidently knowing infrastructure can handle exponential growth

- Operate sustainably by dramatically reducing AI's energy footprint

As Stanford's AI Index notes, "The frontier is increasingly competitive." Companies that win won't just have the best models; they'll have the most efficient infrastructure for deploying them.

Get Started with Tensormesh in 3 Simple Steps with $100 in GPU Credits

Step 1: Evaluate your existing inference costs, energy consumption, and throughput limitations. Identify where redundant computations are costing performance and budget.

Step 2: Deploy Tensormesh. Visit www.tensormesh.ai to access our platform. Integration requires minimal configuration. New users can receive $100 in GPU credits to use with Tensormesh.

Step 3: Scale with Confidence. Use Tensormesh's observability tools to track cost reductions, energy savings, and cache efficiency. As inference demands grow, Tensormesh automatically optimizes resource allocation for consistent performance at any scale.

Ready to break through the cost and energy ceiling? Visit www.tensormesh.ai to claim your $100 in free GPU credits and start optimizing your AI infrastructure.

Sources

- Stanford HAI - The 2025 AI Index Report
  https://hai.stanford.edu/ai-index/2025-ai-index-report

- MIT Technology Review - We did the math on AI's energy footprint
  https://www.technologyreview.com/2025/05/20/1116327/ai-energy-usage-climate-footprint-big-tech/

- The AIC - Inference in industrials: enhancing efficiency through AI adoption
  https://www.theaic.co.uk/aic/news/industry-news/inference-in-industrials-enhancing-efficiency-through-ai-adoption

- Grand View Research - AI Inference Market Size, Share & Growth Report
  https://www.grandviewresearch.com/industry-analysis/artificial-intelligence-ai-inference-market-report

- MarketsandMarkets - AI Inference Market worth $254.98 billion by 2030
  https://www.prnewswire.com/news-releases/ai-inference-market-worth-254-98-billion-by-2030---exclusive-report-by-marketsandmarkets-302388315.html

- Lawrence Berkeley National Laboratory - United States Data Center Energy Usage Report (December 2024)
  https://eta-publications.lbl.gov/sites/default/files/2024-12/lbnl-2024-united-states-data-center-energy-usage-report.pdf