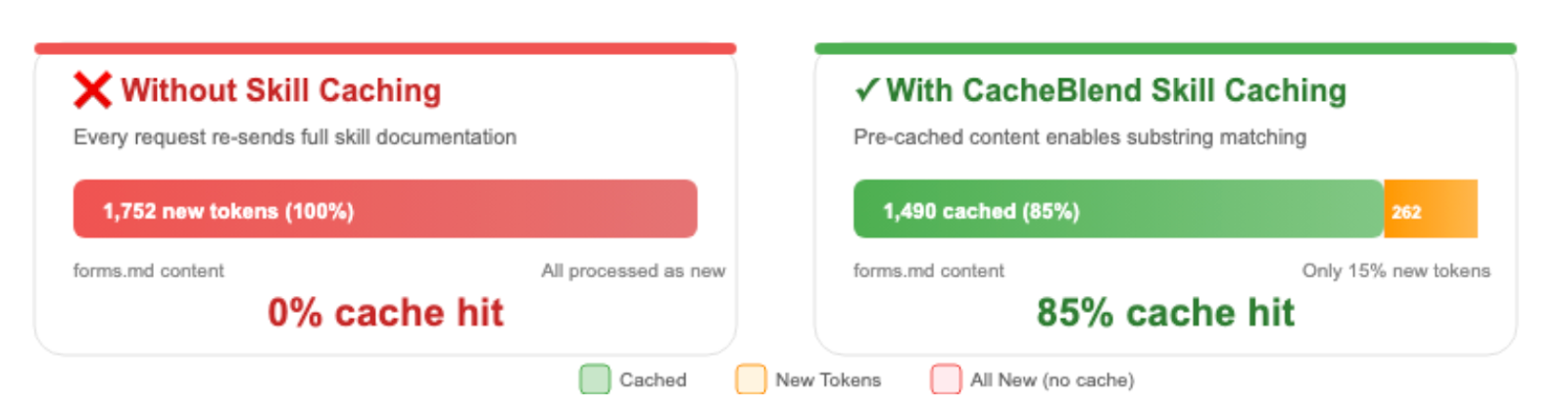

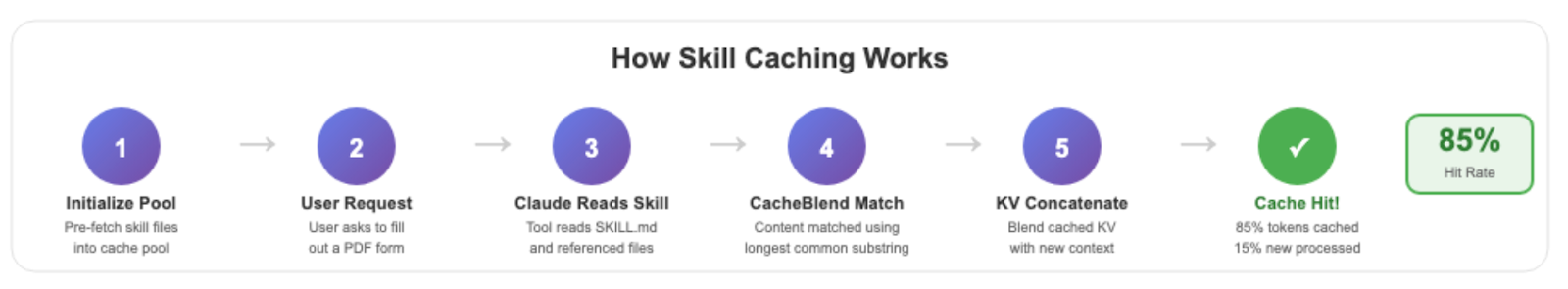

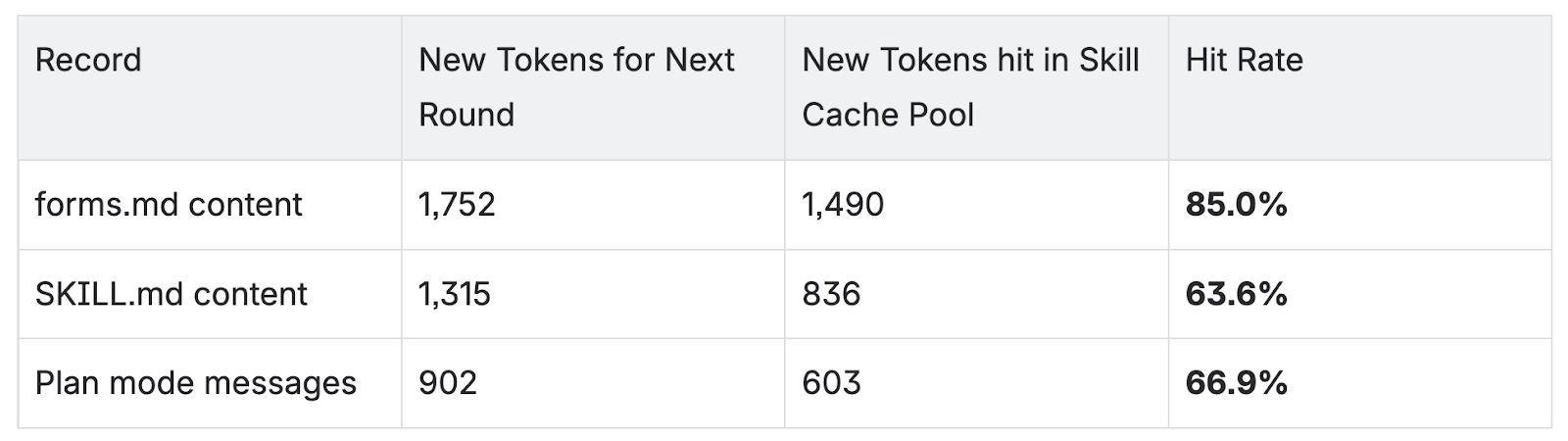

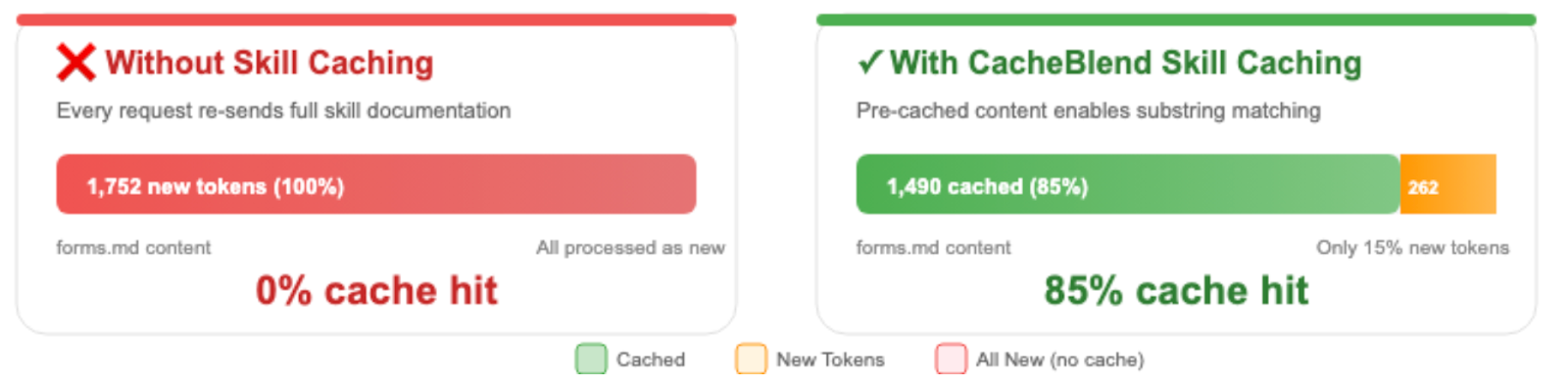

For Agent Skills, traditional prompt caching yields a cache hit rate close to 0%. We have been working on CacheBlend, a non-prefix caching technique that achieves 63.6-85.0% cache hit rates on skill-related content, which can make agents cheaper to run and faster to respond.

Overview

We propose a strategy for caching skill files (such as SKILL.md and the files it references) to enable efficient LLM prompt caching through context editing. By pre-processing skill documentation and storing it in the correct format, we achieve cache hit rates of up to 85% on skill-related content.

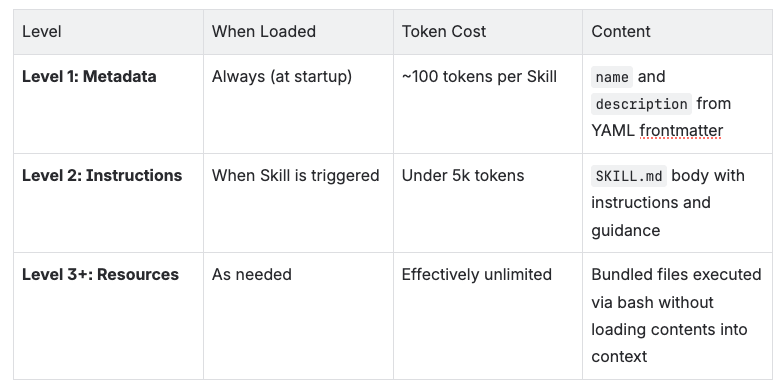

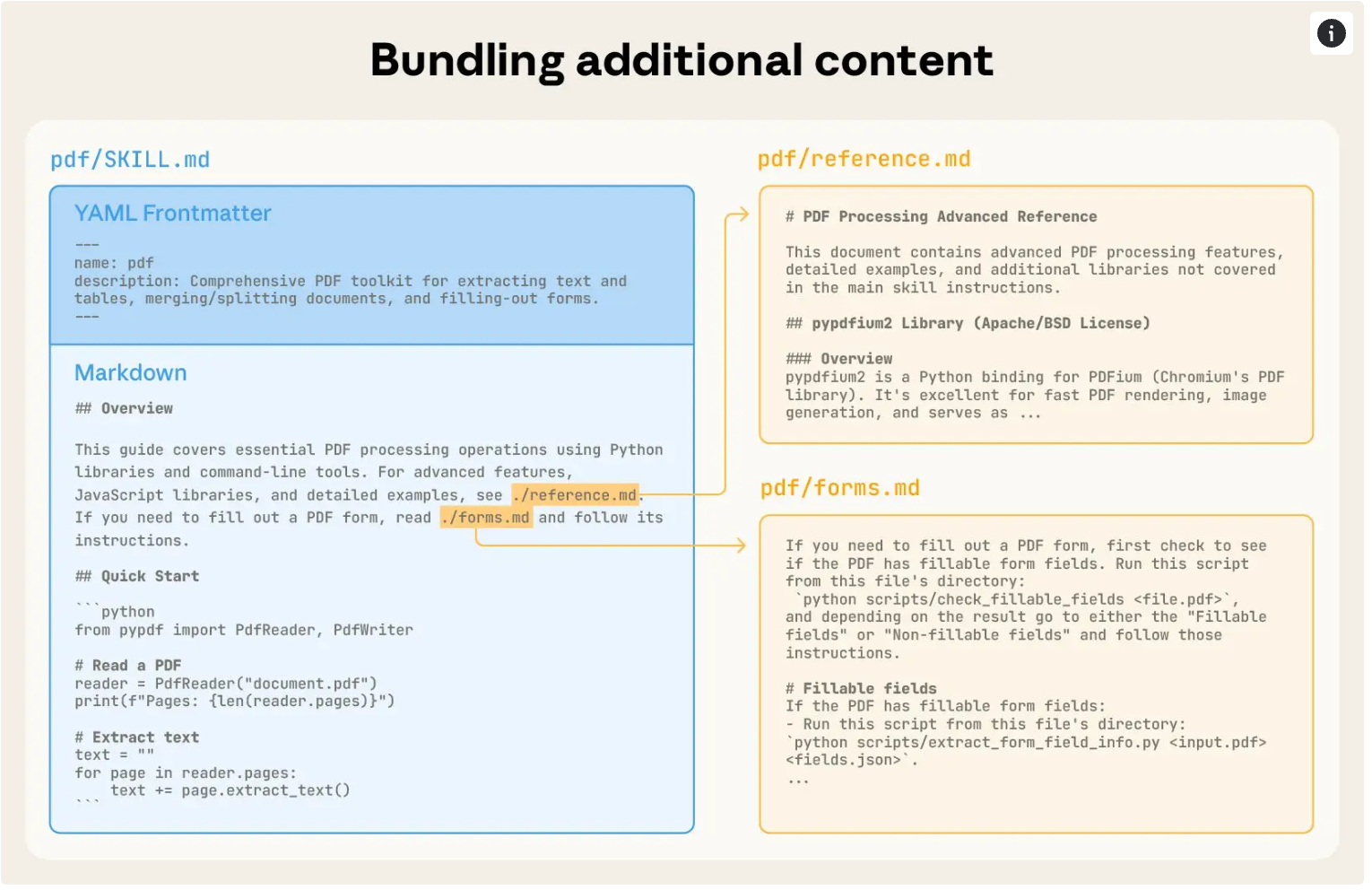

Skills use progressive disclosure: the first several lines of SKILL.md provide just enough information for Claude to know when the skill should be used, without loading the whole file into context.

The body of the file and the resources it references form the second and third levels of detail. If Claude decides the skill is relevant to the current task, it loads the full instructions in SKILL.md and any related resources into context.
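The two levels of disclosure can be sketched as two separate reads of the same file. This is a minimal illustration, not the loader Claude actually uses: it assumes the "first several lines" are a header delimited by `---` with `name` and `description` fields, and the function names `read_summary` and `read_body` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SkillSummary:
    name: str
    description: str


def read_summary(skill_md: str) -> SkillSummary:
    """Parse only the leading header block and ignore the body."""
    lines = skill_md.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("expected a header delimited by '---' (assumed format)")
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        field, _, value = line.partition(":")
        fields[field.strip()] = value.strip()
    return SkillSummary(fields.get("name", ""), fields.get("description", ""))


def read_body(skill_md: str) -> str:
    """Return everything after the header: the detail loaded only on demand."""
    parts = skill_md.split("---", 2)
    return parts[2].strip() if len(parts) == 3 else skill_md


# Hypothetical skill file used only for this example.
SKILL_MD = (
    "---\n"
    "name: pdf\n"
    "description: Extract text and tables from PDF files\n"
    "---\n"
    "# PDF processing\n"
    "Use the helper scripts in scripts/.\n"
)

summary = read_summary(SKILL_MD)  # always in context
body = read_body(SKILL_MD)        # loaded only if the skill looks relevant
```

Only `summary` needs to be present in every prompt; `body` and any referenced files enter the context later, which is why their position in the prompt varies from request to request.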

Problem Statement

When LLM agents use skill files (e.g., PDF processing skills), the skill documentation is inserted into the user context. Without proper caching:

- Each request re-sends the full skill documentation

- No prefix matching occurs with previous requests

- Token costs and latency grow linearly with the amount of skill documentation that is re-sent
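A toy example shows why prefix matching fails here. It is a sketch under simplifying assumptions: whitespace-separated words stand in for tokens, and the prompts and skill text are made up for illustration.

```python
def common_prefix_len(a: list[str], b: list[str]) -> int:
    """Count how many leading items two sequences share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


# Hypothetical skill documentation, identical in every request.
SKILL_DOC = "Use pypdf to open the file then extract text page by page".split()


def build_prompt(user_request: str) -> list[str]:
    # Dynamic user content comes first; the skill documentation follows it.
    return user_request.split() + SKILL_DOC


first = build_prompt("Summarize the attached invoice")
second = build_prompt("Extract tables from report.pdf")

shared = common_prefix_len(first, second)
prefix_hit_rate = shared / len(second)
```

Both prompts end with the same 12 words of skill documentation, yet `shared` is 0 because the requests differ at the very first word, so a prefix cache can reuse nothing.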

Solution: Pre-cached Skill Pool

Skill Files to Cache

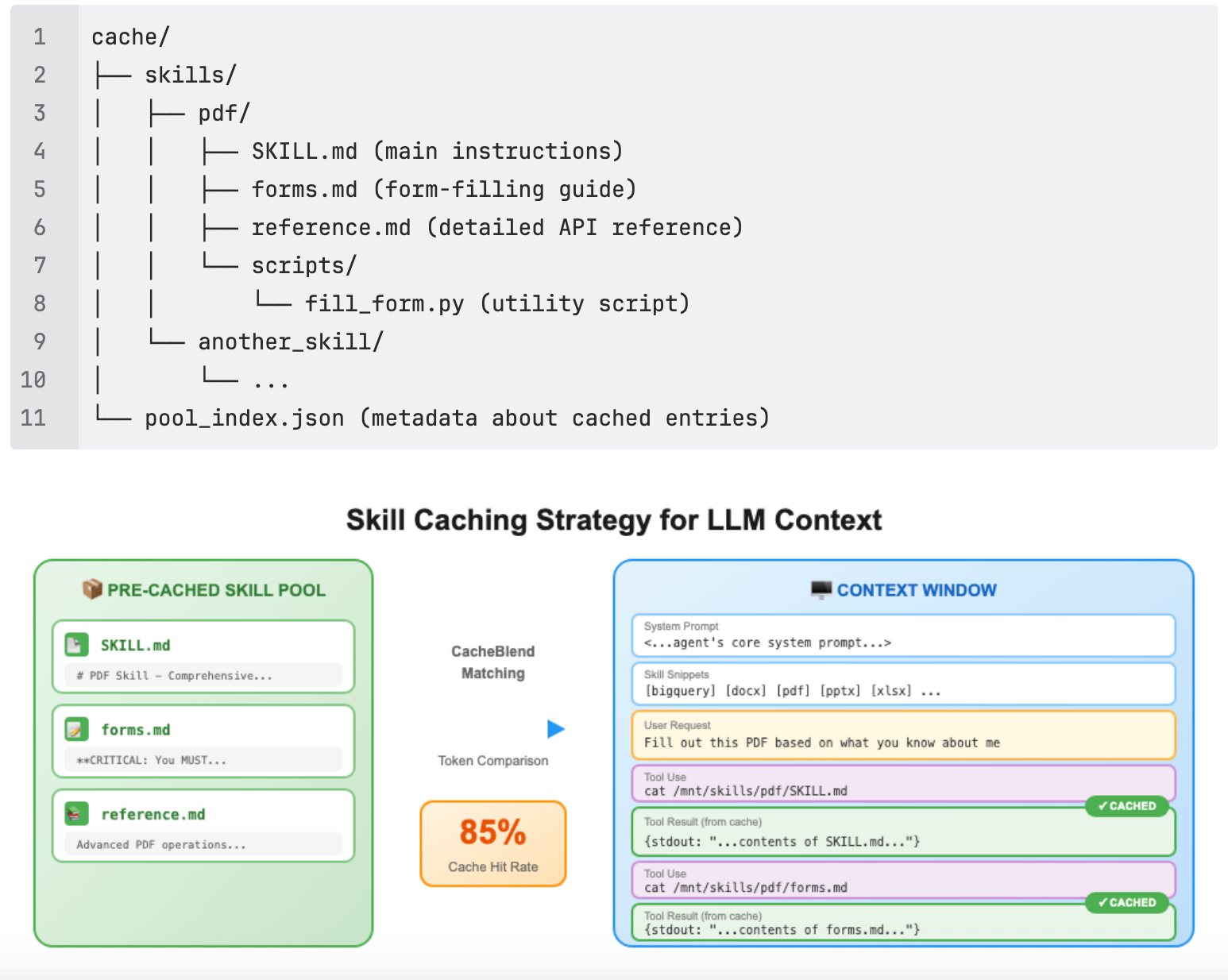

For each skill, cache the main skill file and all referenced files.
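One way to enumerate what goes into the pool is sketched below. It assumes that referenced files are reachable through relative markdown links in SKILL.md, and it stores raw text keyed by a content hash where a real pool would store pre-computed KV states. The names `collect_skill_files` and `build_pool`, and the `pdf` skill layout, are hypothetical.

```python
import hashlib
import re
import tempfile
from pathlib import Path

# Matches the target of a markdown link such as [forms](forms.md).
LINK_RE = re.compile(r"\[[^\]]*\]\(([^)#\s]+)\)")


def collect_skill_files(skill_dir: Path) -> list[Path]:
    """Return SKILL.md plus every local file it links to, transitively."""
    root = skill_dir.resolve()
    pending = [root / "SKILL.md"]
    seen: list[Path] = []
    while pending:
        path = pending.pop()
        if path in seen or not path.is_file():
            continue
        seen.append(path)
        if path.suffix == ".md":
            for target in LINK_RE.findall(path.read_text(encoding="utf-8")):
                if "://" not in target:  # skip external URLs
                    pending.append((path.parent / target).resolve())
    return seen


def build_pool(skill_dir: Path) -> dict[str, str]:
    """Map a content hash to file text (a stand-in for cached KV states)."""
    pool = {}
    for path in collect_skill_files(skill_dir):
        text = path.read_text(encoding="utf-8")
        pool[hashlib.sha256(text.encode("utf-8")).hexdigest()] = text
    return pool


# Build a throwaway skill directory to exercise the functions.
with tempfile.TemporaryDirectory() as tmp:
    skill = Path(tmp) / "pdf"
    skill.mkdir()
    (skill / "SKILL.md").write_text(
        "See [forms](forms.md) and [reference](reference.md).", encoding="utf-8"
    )
    (skill / "forms.md").write_text("Fill forms.", encoding="utf-8")
    (skill / "reference.md").write_text("API notes.", encoding="utf-8")
    cached_names = sorted(p.name for p in collect_skill_files(skill))
    pool = build_pool(skill)
```

Keying by content hash means that a file whose text is unchanged maps to the same pool entry no matter which request loads it.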

Context Editing for Cache Hits

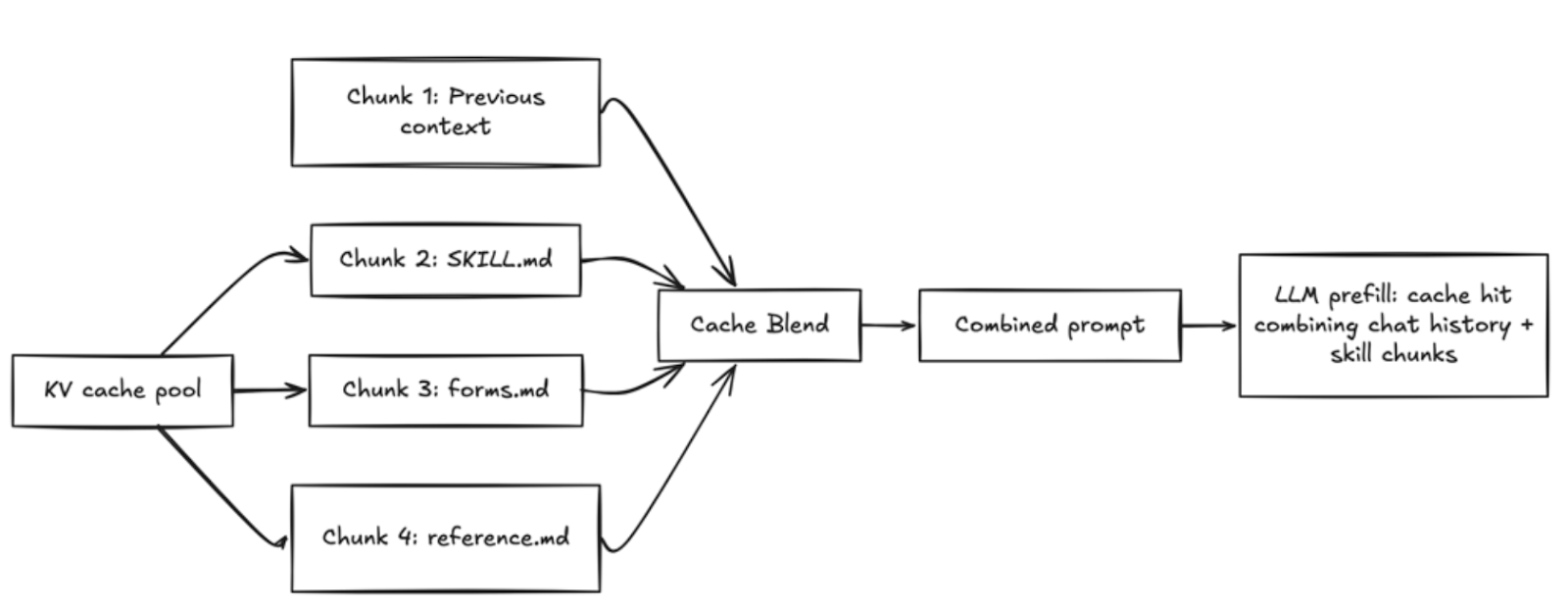

Traditional prefix caching requires skill content to appear at the exact same position in every prompt. However, with CacheBlend, we can concatenate pre-cached skill KV states with the existing context at any position.

Key Advantage: With CacheBlend, skill content does not need to sit at the start of the prompt. It can follow dynamic content (the user request, conversation history) and still hit the cache.
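The difference between the two lookup policies can be sketched as follows. This is an illustration of the eligibility decision only: it does not model how CacheBlend concatenates KV states, and the segment granularity, the hashing scheme, and the function names are all assumptions made for the example.

```python
import hashlib
from dataclasses import dataclass


def content_key(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class Segment:
    text: str
    cached: bool


def position_independent_reuse(segments: list[str], pool: set[str]) -> list[Segment]:
    """Mark a segment reusable if its content is in the pool, wherever it sits."""
    return [Segment(s, content_key(s) in pool) for s in segments]


def prefix_only_reuse(segments: list[str], pool: set[str]) -> list[Segment]:
    """Prefix caching: reuse stops at the first segment that misses."""
    plan = []
    still_matching = True
    for s in segments:
        still_matching = still_matching and content_key(s) in pool
        plan.append(Segment(s, still_matching))
    return plan


# Hypothetical prompt: dynamic content first, skill documentation in the middle.
skill_doc = "PDF skill: open the file, then extract text page by page."
prompt = ["User: summarize invoice.pdf", skill_doc, "User: now list the totals"]
pool = {content_key(skill_doc)}

blend_plan = [seg.cached for seg in position_independent_reuse(prompt, pool)]
prefix_plan = [seg.cached for seg in prefix_only_reuse(prompt, pool)]
```

Here `blend_plan` marks the middle segment as reusable while `prefix_plan` marks nothing, because the first segment is a user request that was never cached.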

Cache Hit Analysis

With proper caching, we observed these results on skill-related requests:

Note: "New Content Appended to Prefix" is the total number of words in the new content appended to the cached prefix. With CacheBlend, this new content can be placed after dynamic content (such as user requests) and still hit the cache through concatenation of pre-computed KV states. "Matched" is the number of words from skill files that were found in the cache pool, which is what produces the cache hits.
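The relationship between the two quantities can be written out as simple arithmetic. The sketch assumes that the hit rate is the "Matched" word count divided by the "New Content Appended to Prefix" word count; the numbers used are made up for illustration and are not measurements from this work.

```python
def cache_hit_rate(matched_words: int, new_content_words: int) -> float:
    """Fraction of the newly appended content that was served from the pool."""
    if new_content_words <= 0:
        raise ValueError("new_content_words must be positive")
    if not 0 <= matched_words <= new_content_words:
        raise ValueError("matched_words must be between 0 and new_content_words")
    return matched_words / new_content_words


# Illustrative values only, not reported results.
new_content_words = 1200
matched_words = 900

rate = cache_hit_rate(matched_words, new_content_words)
words_still_processed = new_content_words - matched_words
```

With these example values, `rate` is 0.75 and 300 words still have to be processed from scratch.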

Benefits

- Reduced Token Costs: Up to 85% of skill content hits the cache, leaving as little as 15% to be processed as new tokens

- Lower Latency: Reusing cached KV states for skill content enables faster response generation

Conclusion

By pre-caching skill files and reusing their KV states wherever the skill content appears in the prompt, we achieve cache hit rates of 63.6-85.0% on skill-related content. This reduces token costs and latency while keeping skill documentation consistent across requests.