If you're building AI agents, chatbots, or LLM-powered applications, there's one metric that matters more than almost any other for your production infrastructure: KV cache hit rate.

This single number strongly influences both your inference latency and your GPU costs. Yet most teams building AI applications don't optimize for it, or worse, don't measure it at all. The result? Skyrocketing costs and performance bottlenecks that hold back innovation.

Why KV Cache Hit Rate Is Your Most Important Metric

When a large language model processes text, it stores intermediate results (the attention keys and values computed for each token) as large tensors called the KV cache (Key-Value cache). Think of it as the model's short-term memory. Instead of re-reading and reprocessing the same text from scratch, the model can pick up directly from these stored tensors.

The benefits are enormous:

- Dramatically reduced Time-to-First-Token (TTFT) for queries with repeated content

- 10x cost reduction on cached tokens versus uncached tokens (for example, Claude Sonnet charges $0.30 per million tokens for cached input versus $3.00 for uncached)

- Less GPU compute per request, since you're not recomputing what you've already processed

The longer the repeated text, the more computation you save. This is especially critical for multi-modal data like images or videos, where KV caches can represent massive amounts of computation.
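To make the pricing example concrete, here is a minimal sketch that estimates blended input cost at different cache hit rates. It uses only the two prices quoted above ($3.00 uncached and $0.30 cached per million input tokens). The function name and the 100-million-token workload are illustrative assumptions, and the sketch ignores any extra fee a provider may charge for writing to the cache.

```python
def blended_input_cost(total_tokens: int, hit_rate: float,
                       uncached_per_m: float = 3.00,
                       cached_per_m: float = 0.30) -> float:
    """Estimated input cost in dollars for a token-level cache hit rate.

    Prices are dollars per million input tokens. The defaults are the
    example figures quoted in the text, not a live price list.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    cached = total_tokens * hit_rate
    uncached = total_tokens - cached
    return (cached * cached_per_m + uncached * uncached_per_m) / 1_000_000


# Hypothetical workload: 100 million input tokens.
for rate in (0.0, 0.5, 0.9):
    print(f"hit rate {rate:.0%}: ${blended_input_cost(100_000_000, rate):.2f}")
```

Under these assumptions the same workload costs about $300 with no cache hits, $165 at a 50% hit rate, and $57 at 90%.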

The Problem: KV Caches Disappear Fast

Here's where things get challenging. The KV cache lives in the GPU's VRAM, which is fast but severely limited in capacity, and these caches are large. When memory fills up, older caches must be evicted to make room for new ones.

Consider a typical chatbot scenario on a busy system:

- User A sends a prompt and receives an answer

- Multiple other users send requests that get processed in parallel

- By the time User A asks a follow-up question, their original KV cache is gone from GPU memory

- The LLM must reprocess the entire conversation from scratch

It's like having a brilliant analyst who forgets everything they learned after each question.

For AI agents, the problem is even more severe. As noted by the team at Manus, agents typically have an input-to-output token ratio of around 100:1, with contexts that grow with every step in the agent loop. Each action and observation appends to the context, creating massive prefill requirements while producing relatively short structured outputs.
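A rough way to see why growing contexts hurt is to count how many tokens an agent loop has to prefill with and without prefix reuse. The sketch below is a simplified model, not a measurement: the 2,000-token starting context, 500 tokens appended per step, and 20 steps are made-up numbers, and it assumes the cache is never evicted between steps.

```python
def prefill_tokens(initial: int, per_step: int, steps: int, cached: bool) -> int:
    """Total tokens the model must prefill across an agent loop.

    initial:  tokens in the context at the first step (system prompt, tools, task)
    per_step: tokens appended after each step (action plus observation)
    cached:   if True, assume the previous context is always still cached
    """
    total = 0
    context = initial
    for step in range(steps):
        if cached and step > 0:
            total += per_step   # only the newly appended tokens are new work
        else:
            total += context    # the whole context is processed again
        context += per_step
    return total


# Hypothetical agent run: 2,000-token start, 500 tokens added per step, 20 steps.
without_cache = prefill_tokens(2_000, 500, 20, cached=False)
with_cache = prefill_tokens(2_000, 500, 20, cached=True)
print(without_cache, with_cache)  # 135000 11500
```

In this toy run, perfect prefix reuse cuts prefill work from 135,000 tokens to 11,500, and the gap widens as the loop gets longer.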

The Infrastructure Blind Spot

Teams focused on prompt engineering and model selection often overlook the infrastructure layer where KV cache management happens. Three critical mistakes kill your cache hit rate:

1. Unstable Prompt Prefixes

Due to the autoregressive nature of LLMs, even a single-token difference invalidates the cache from that token onward. A common culprit? Including timestamps precise to the second at the beginning of system prompts. Sure, it tells the model the current time, but it completely destroys your cache hit rate.
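The sketch below contrasts the two prompt layouts. It is illustrative only: the prompt text and function names are invented, and it compares characters rather than tokens as a stand-in for what a real prefix cache would match. The idea is to keep static text at the front and push volatile values to the end, at the coarsest granularity the model actually needs.

```python
import os
from datetime import datetime

SYSTEM_PROMPT = "You are a helpful assistant. Answer concisely."


def unstable_prompt(user_msg: str, now: datetime) -> str:
    # Timestamp first: consecutive requests diverge almost immediately.
    stamp = now.isoformat(timespec="seconds")
    return f"Current time: {stamp}\n{SYSTEM_PROMPT}\nUser: {user_msg}"


def stable_prompt(user_msg: str, now: datetime) -> str:
    # Static text first; the volatile value goes last and only to the day.
    return f"{SYSTEM_PROMPT}\nUser: {user_msg}\nCurrent date: {now.date().isoformat()}"


def shared_prefix_len(a: str, b: str) -> int:
    """Length of the common leading substring (a proxy for reusable cache)."""
    return len(os.path.commonprefix([a, b]))


t1 = datetime(2025, 1, 1, 12, 0, 0)
t2 = datetime(2025, 1, 1, 12, 0, 1)  # one second later

bad = shared_prefix_len(unstable_prompt("hi", t1), unstable_prompt("bye", t2))
good = shared_prefix_len(stable_prompt("hi", t1), stable_prompt("bye", t2))
print(bad < len(SYSTEM_PROMPT) <= good)  # True
```

With the timestamp up front, two requests one second apart share less than the system prompt. With the stable layout, the whole system prompt stays reusable regardless of the user message.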

2. Non-Deterministic Context

Modifying previous actions or observations, or using non-deterministic JSON serialization (where key ordering isn't stable), silently breaks cache coherence across requests.
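One low-effort guard is to serialize tool calls and observations canonically before they enter the context. The sketch below uses Python's standard `json.dumps` with `sort_keys=True` and fixed separators; the helper name and the sample tool call are hypothetical.

```python
import json


def serialize_stable(obj) -> str:
    """Serialize to JSON with sorted keys and fixed separators, so that
    logically equal objects always yield byte-identical text."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False)


# Same content, different key insertion order.
call_a = {"tool": "search", "args": {"q": "x", "k": 3}}
call_b = {"args": {"k": 3, "q": "x"}, "tool": "search"}

print(json.dumps(call_a) == json.dumps(call_b))            # False
print(serialize_stable(call_a) == serialize_stable(call_b))  # True
print(serialize_stable(call_a))  # {"args":{"k":3,"q":"x"},"tool":"search"}
```

Pair this with an append-only context: add new actions and observations to the end rather than rewriting earlier entries, so everything before the newest entry stays byte-identical.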

3. Cache Eviction at Scale

On busy systems handling multiple users or parallel requests, your carefully built KV caches get evicted before they can be reused. The "short-term memory" vanishes just when you need it most.
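The toy simulation below shows how this plays out under a least-recently-used (LRU) policy. It is a simplified model, not how any particular inference server manages memory: the class name, the token-based capacity, and the request sizes are all assumptions chosen for illustration.

```python
from collections import OrderedDict


class LRUKVCache:
    """Toy model of a fixed-capacity KV cache with LRU eviction."""

    def __init__(self, capacity_tokens: int):
        self.capacity = capacity_tokens
        self.used = 0
        self.entries = OrderedDict()  # conversation id -> size in tokens
        self.hits = 0
        self.misses = 0

    def request(self, key: str, size_tokens: int) -> bool:
        """Return True on a cache hit, False on a miss (then try to cache)."""
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        # Evict least recently used entries until the new one fits.
        while self.entries and self.used + size_tokens > self.capacity:
            _, freed = self.entries.popitem(last=False)
            self.used -= freed
        if size_tokens <= self.capacity:
            self.entries[key] = size_tokens
            self.used += size_tokens
        return False

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# User A, then two other users, then User A's follow-up (4,000 tokens each).
small = LRUKVCache(capacity_tokens=10_000)
for user in ("A", "B", "C", "A"):
    small.request(user, 4_000)
print(small.hit_rate)  # 0.0: A was evicted before the follow-up arrived

large = LRUKVCache(capacity_tokens=12_000)
for user in ("A", "B", "C", "A"):
    large.request(user, 4_000)
print(large.hit_rate)  # 0.25: A's cache survived
```

Tracking hits and misses like this, even in a rough model, is also a simple way to start measuring hit rate rather than guessing at it.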

Where KV Cache Waste Is Costing You Money

Think about your LLM workload patterns. How often does your application reprocess identical or similar content?

Common scenarios with massive repetition:

- RAG systems: The same retrieved documents get added to the prompt for many different user queries

- Multi-turn conversations: System prompts and conversation history repeat with every turn

- Batch processing: Processing similar data at predictable times (time-of-day patterns)

- Agent workflows: Identical tool definitions and environment state across iterations

- A/B testing: Multiple variants processing the same base context

If you can identify repetition in your prompts, you could be spending 5-10x more on GPU costs than necessary by not optimizing for cache reuse.

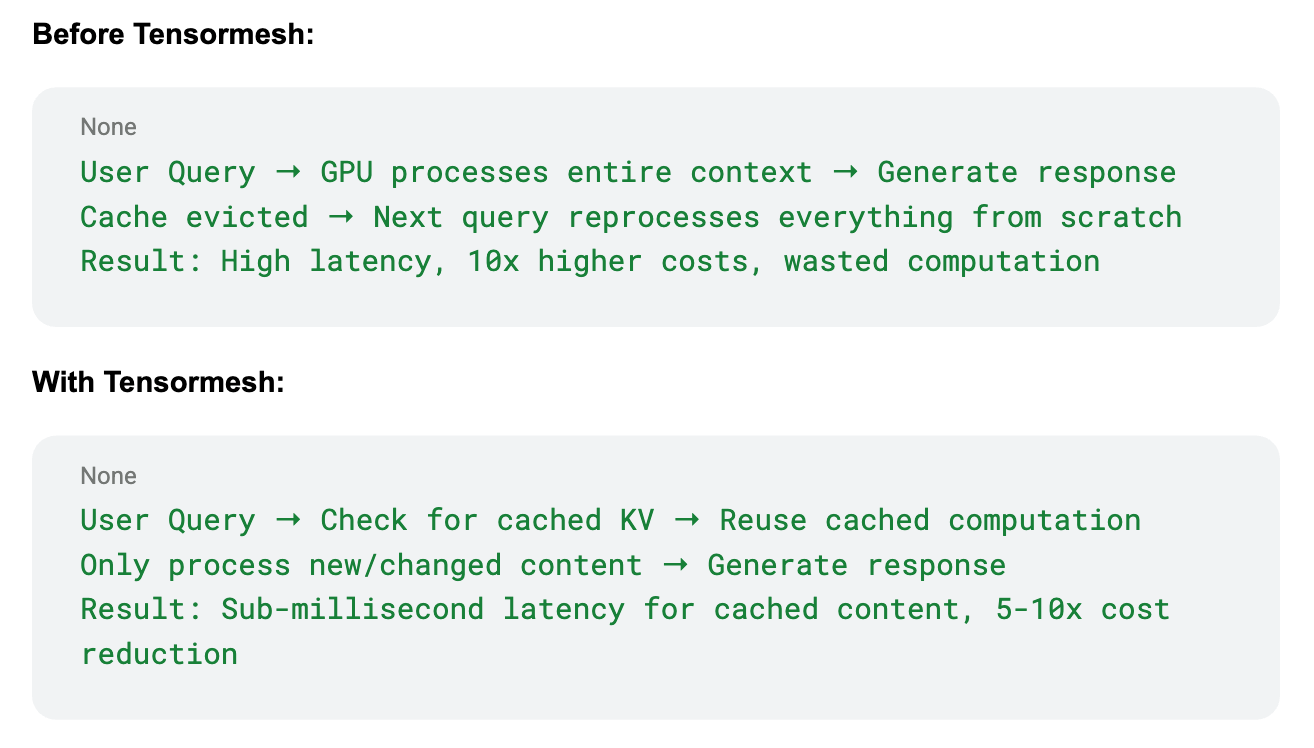

The Tensormesh Solution: Intelligent KV Cache Persistence

This is exactly why we built Tensormesh. While teams at companies like Manus have done brilliant work designing their agent architectures around KV cache optimization, most organizations don't have the time or expertise to manually re-architect their entire system.

Tensormesh automatically handles KV cache persistence and reuse through our integration with LMCache, the open-source library created by our founders. Instead of letting KV caches disappear when they're evicted from GPU VRAM, we store them intelligently in CPU RAM, local SSDs, or shared storage.
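Conceptually, the idea is to demote evicted caches to a slower tier instead of discarding them. The sketch below illustrates that general pattern with two tiers. It is not the Tensormesh or LMCache API: every class, method, and parameter name here is hypothetical, and a real system would move actual tensors between devices rather than Python objects between dictionaries.

```python
from collections import OrderedDict


class TieredKVStore:
    """Toy two-tier store: a small fast tier ("gpu") backed by a larger
    slow tier ("cpu"). Entries evicted from the fast tier are demoted,
    not dropped."""

    def __init__(self, gpu_slots: int, cpu_slots: int):
        self.gpu_slots = gpu_slots
        self.cpu_slots = cpu_slots
        self.gpu = OrderedDict()
        self.cpu = OrderedDict()

    def put(self, key: str, kv) -> None:
        self.gpu[key] = kv
        self.gpu.move_to_end(key)
        while len(self.gpu) > self.gpu_slots:
            old_key, old_kv = self.gpu.popitem(last=False)
            self.cpu[old_key] = old_kv          # demote to the slow tier
            self.cpu.move_to_end(old_key)
            while len(self.cpu) > self.cpu_slots:
                self.cpu.popitem(last=False)    # only now is it truly lost

    def get(self, key: str):
        """Return (value, tier), where tier is "gpu", "cpu", or "miss"."""
        if key in self.gpu:
            self.gpu.move_to_end(key)
            return self.gpu[key], "gpu"
        if key in self.cpu:
            kv = self.cpu.pop(key)
            self.put(key, kv)                   # promote back to the fast tier
            return kv, "cpu"
        return None, "miss"


store = TieredKVStore(gpu_slots=2, cpu_slots=2)
for user in ("A", "B", "C"):
    store.put(user, f"kv-for-{user}")

print(store.get("A"))  # ('kv-for-A', 'cpu'): evicted from the fast tier, still reusable
print(store.get("Z"))  # (None, 'miss')
```

A hit in the slow tier costs a transfer back to the GPU, but that is typically far cheaper than recomputing a long prefill from scratch.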

What This Means For Your Infrastructure

Tensormesh automatically:

- Identifies repetition in the content sent to your LLM

- Stores KV caches safely where they can be reused minutes, hours, or days later

- Routes requests intelligently to maximize cache hit rates

- Works seamlessly with open-source frameworks

Transform Your AI Infrastructure in Three Simple Steps

Don't let poor cache management hold back your innovation. Getting started with Tensormesh takes just minutes:

Step 1: Access Tensormesh

→ Visit tensormesh.ai and join our beta for free (no credit card required).

For a limited time, we're offering $100 in GPU credits so you can verify the gains with your own workload.

Step 2: Integrate Seamlessly

Tensormesh plugs right into your existing framework. No re-architecture required.

Step 3: Watch Your Metrics Transform

See your infrastructure deliver what it should have been all along:

- ⚡ Sub-second latency for repeated queries

- 💡 5-to-10x reduction in GPU costs

- 🔍 Full observability across every workload

- 🧩 Effortless scaling as your application grows

Your infrastructure should amplify your capabilities, not constrain them. Stop recomputing what you've already processed. Start maximizing your KV cache hit rate with Tensormesh.

References: This article draws insights from "Context Engineering for AI Agents: Lessons from Building Manus" by Yichao 'Peak' Ji, which provides an excellent deep dive into how production AI agents optimize for KV cache performance.