The Economics and Infrastructure Crisis

The financial reality is harsh: according to an MIT NANDA report, 95% of companies implementing generative AI initiatives see no return on investment.

Consider the industry leaders:

- OpenAI lost $5 billion in 2024 despite $3.7 billion in revenue, spending 50% on inference compute costs alone

- Anthropic’s losses reached $5.3 billion in 2024

- The average monthly AI spend is projected to rise from $62,964 in 2024 to $85,521 in 2025, a 36% increase

If industry leaders with billions in funding can't achieve profitability, the challenge for ordinary organizations is even more severe.

The root cause? AI workloads are highly repetitive (shared system prompts, reused documents, multi-turn conversation history), yet traditional inference architectures treat each request as entirely new work, recalculating model activations and intermediate computations from scratch. This redundancy creates compounding costs:

- High-end GPUs like the NVIDIA H100 consume up to 700 watts per unit

- Companies pay full compute costs for work that's already been done

- 21% of larger companies lack formal cost-tracking systems, making optimization nearly impossible

As AI deployments scale, these inefficiencies compound, forcing organizations to choose between ballooning costs and degraded performance.
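To see why recomputing from scratch compounds, consider a multi-turn chat in which every turn appends new tokens and the full history is resent as the prompt. This is a minimal sketch under two simplifying assumptions: each turn adds a fixed number of tokens, and prefill work is proportional to the number of tokens processed (in reality attention cost grows faster than linearly with context length, so the gap is larger).

```python
def prefill_tokens(turns: int, tokens_per_turn: int, reuse_history: bool) -> int:
    """Total prompt tokens the model must process over one conversation.

    Toy model (assumption): turn t resends the full history of
    t * tokens_per_turn tokens as its prompt.
    """
    total = 0
    for t in range(1, turns + 1):
        context = t * tokens_per_turn
        if reuse_history:
            # Only the tokens appended this turn are new work;
            # earlier history would be served from cached model memory.
            total += tokens_per_turn
        else:
            # Stateless inference recomputes the entire context every turn.
            total += context
    return total


turns, per_turn = 20, 500
naive = prefill_tokens(turns, per_turn, reuse_history=False)
cached = prefill_tokens(turns, per_turn, reuse_history=True)
print(f"recompute everything: {naive:,} tokens")
print(f"reuse history:        {cached:,} tokens")
print(f"redundant work ratio: {naive / cached:.1f}x")
```

Without reuse, total work grows quadratically with the number of turns: over 20 turns of 500 tokens this toy model processes 105,000 tokens instead of 10,000, a 10.5× difference that keeps widening as conversations get longer.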

How Caching Model Memory Transforms Economics

The solution lies in fundamentally rethinking inference infrastructure. Caching model memory, the intermediate computations and activations produced during inference (in transformer models, chiefly the attention key-value or KV cache), represents the next frontier in AI efficiency, delivering greater performance gains than any other optimization technique. Instead of treating each request independently, intelligent memory caching eliminates redundant work automatically.
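The core idea can be illustrated with a toy model; this is a sketch of the general technique, not Tensormesh's implementation. `ToyModel` and `PrefixStateCache` are hypothetical names, and a hash chain stands in for the per-token state a real transformer keeps in its KV cache. Because a causal model's state after a prefix does not depend on later tokens, a new request can resume from the longest prefix it has already seen and compute only the rest.

```python
import hashlib


class ToyModel:
    """Hypothetical stand-in for a causal language model.

    The state after token i depends only on tokens 0..i, which is the
    property that makes reusing a cached prefix state safe. In a real
    transformer the KV cache plays the role of `state`.
    """

    def __init__(self) -> None:
        self.steps_computed = 0  # number of "expensive" per-token computations

    def step(self, state: bytes, token: str) -> bytes:
        self.steps_computed += 1
        return hashlib.sha256(state + token.encode()).digest()


class PrefixStateCache:
    """Remembers the state reached after every prefix processed so far."""

    def __init__(self, model: ToyModel) -> None:
        self.model = model
        self.states: dict[tuple[str, ...], bytes] = {(): b""}

    def run(self, tokens: list[str]) -> bytes:
        # Longest prefix of this request whose state is already cached;
        # the empty prefix is always present, so the loop terminates.
        start = len(tokens)
        while tuple(tokens[:start]) not in self.states:
            start -= 1
        state = self.states[tuple(tokens[:start])]
        # Compute only the remaining tokens, caching each new prefix state.
        for i in range(start, len(tokens)):
            state = self.model.step(state, tokens[i])
            self.states[tuple(tokens[: i + 1])] = state
        return state


model = ToyModel()
cache = PrefixStateCache(model)
system = ["You", "are", "a", "helpful", "assistant", "."]
cache.run(system + ["Summarize", "doc", "A"])
cache.run(system + ["Summarize", "doc", "B"])
print(model.steps_computed)  # 10 steps instead of 18 without caching
```

Keying the cache by whole-prefix tuples keeps the sketch short but uses memory that grows with the square of prompt length; production engines use more compact structures, such as hashed token blocks (vLLM) or radix trees (SGLang).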

Tensormesh's Approach to Efficiency

Tensormesh addresses the inference cost crisis through three core capabilities:

Intelligent Model Memory Caching

Tensormesh automatically identifies and caches the intermediate computations and activations that make up the model's "memory," then reuses them across inference requests. The platform detects overlapping computations across queries, from exact matches to partial prefixes.
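One common way to detect both exact and partial prefix overlap, used for example by vLLM's automatic prefix caching, is to split each prompt into fixed-size token blocks and give every block a hash that also covers all blocks before it. A lookup walks the chain and stops at the first miss. The sketch below assumes integer token IDs and a block size of 4 for readability; `block_hashes` and `BlockPrefixIndex` are illustrative names, not Tensormesh's actual index.

```python
import hashlib

BLOCK_SIZE = 4  # assumption: tokens per cache block (vLLM defaults to 16)


def block_hashes(token_ids: list[int]) -> list[str]:
    """Chained hash per full block: each hash covers its block and all blocks before it."""
    hashes: list[str] = []
    parent = ""
    full = len(token_ids) - len(token_ids) % BLOCK_SIZE  # ignore trailing partial block
    for start in range(0, full, BLOCK_SIZE):
        block = token_ids[start : start + BLOCK_SIZE]
        digest = hashlib.sha256((parent + repr(block)).encode()).hexdigest()
        hashes.append(digest)
        parent = digest
    return hashes


class BlockPrefixIndex:
    """Set of block hashes whose model memory is assumed to be cached."""

    def __init__(self) -> None:
        self.cached: set[str] = set()

    def insert(self, token_ids: list[int]) -> None:
        self.cached.update(block_hashes(token_ids))

    def matched_tokens(self, token_ids: list[int]) -> int:
        """Number of leading tokens whose cached model memory could be reused."""
        matched = 0
        for h in block_hashes(token_ids):
            if h not in self.cached:
                break  # first miss ends the shared prefix
            matched += BLOCK_SIZE
        return matched


index = BlockPrefixIndex()
index.insert(list(range(12)))  # cache three full blocks
print(index.matched_tokens(list(range(12))))                   # 12: exact match
print(index.matched_tokens(list(range(8)) + [99, 98, 97, 96]))  # 8: partial prefix
print(index.matched_tokens([5] + list(range(11))))             # 0: nothing shared
```

Because each hash depends on everything before it, two prompts that diverge early never falsely match later blocks. The trade-off is granularity: tokens in a trailing partial block are not matched.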

This approach delivers:

- 5-10× cost reduction by maximizing the reuse of cached model memory

- Sub-millisecond latency for cached query components

- Faster time-to-first-token even on complex, multi-step inference

The impact extends beyond individual requests. As workload patterns emerge, memory cache efficiency improves, creating a compounding effect on both performance and cost savings that surpasses traditional optimization approaches.
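How large the reduction gets depends on how much work is actually served from cache. As a back-of-the-envelope model (an assumption for illustration, not a Tensormesh formula), let $h$ be the fraction of computation served from cached model memory and $c$ the cost of a cached unit relative to recomputing it. The relative cost and the resulting reduction factor $R$ are:

```latex
\frac{\text{cost with caching}}{\text{cost without caching}} = (1 - h) + h\,c,
\qquad
R = \frac{1}{(1 - h) + h\,c}
```

With $c = 0.1$, reaching $R = 5$ requires $h = 8/9 \approx 0.89$, and $R$ approaches 10 only as $h \to 1$. Under this model, the upper end of a 5-10× range assumes nearly all repeated computation is reused.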

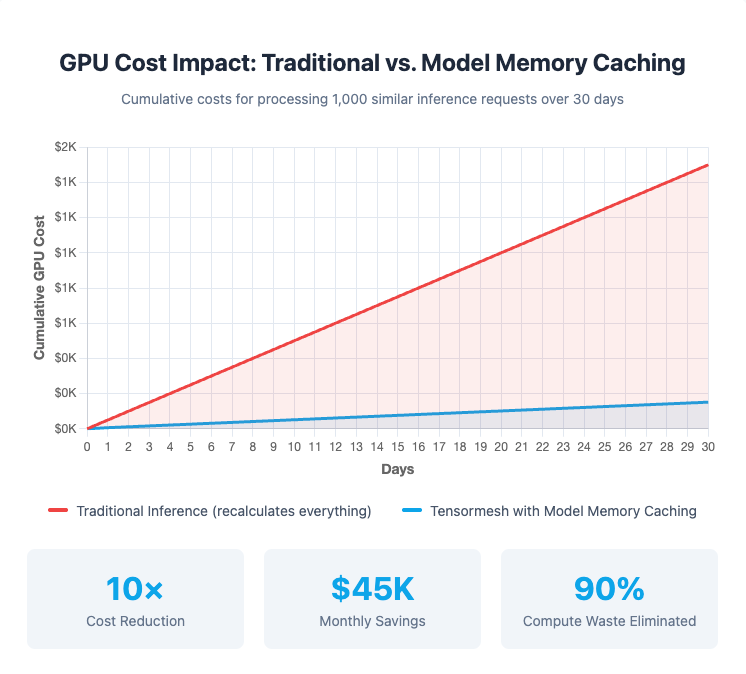

Figure 1: GPU Cost Comparison Over 30 Days

This chart illustrates the dramatic cost difference between traditional inference and Tensormesh's model memory caching. In a typical scenario processing 1,000 similar requests over 30 days:

- Traditional inference (red line) recalculates everything from scratch for each request, resulting in costs that climb to over $50,000

- Tensormesh with model memory caching (blue line) processes the first request normally, then serves subsequent similar requests from cache at just 10% of the cost, keeping total expenses at roughly $5,000

The gap between these lines represents wasted money spent on redundant GPU computations that Tensormesh eliminates automatically. For organizations processing millions of requests monthly, this efficiency translates to hundreds of thousands of dollars in savings.
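The figure's scenario can be reproduced with simple arithmetic. This sketch assumes a flat, hypothetical GPU cost of $50 per uncached request (chosen so that 1,000 requests total $50,000) and, as the chart does, charges each cached request 10% of that.

```python
def scenario_totals(
    requests: int, cost_per_request: float, cached_fraction_cost: float
) -> tuple[float, float]:
    """Return (traditional_total, cached_total) in dollars.

    Traditional inference pays full price for every request. With model
    memory caching, only the first request is computed in full and every
    later similar request costs `cached_fraction_cost` of the full price.
    """
    traditional = requests * cost_per_request
    cached = cost_per_request + (requests - 1) * cost_per_request * cached_fraction_cost
    return traditional, cached


traditional, cached = scenario_totals(1_000, 50.0, 0.10)
print(f"traditional: ${traditional:,.0f}")
print(f"with cache:  ${cached:,.0f}")
print(f"saved:       ${traditional - cached:,.0f} ({1 - cached / traditional:.1%})")
```

Under these assumptions the cached total is $5,045, about a tenth of the $50,000 baseline, for a saving of roughly 89.9%. Real totals depend on the actual per-request cost and on how many requests genuinely share reusable work.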

Unified Framework Integration

Rather than forcing infrastructure changes, Tensormesh integrates seamlessly with leading open-source frameworks including vLLM and SGLang.

Organizations can:

- Deploy on existing public cloud infrastructure

- Maintain compatibility with current model architectures

- Integrate in minutes without code refactoring

- Scale across multi-model deployments

This approach eliminates the trade-off between optimization and flexibility.

Complete Observability and Control

Tensormesh provides granular visibility into:

- Real-time GPU utilization and model memory cache performance

- Per-query cost attribution and efficiency metrics

- Workload patterns and optimization opportunities

- Infrastructure health and capacity planning

Teams gain the insights needed to optimize continuously, ensuring AI spending aligns with business value.
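Per-query cost attribution can be approximated by pricing the tokens each query actually computed separately from the tokens it reused. The sketch below is hypothetical: `QueryRecord`, its field names, and the per-million-token prices are placeholders, not Tensormesh's metrics schema or pricing.

```python
from dataclasses import dataclass


@dataclass
class QueryRecord:
    query_id: str
    prompt_tokens: int   # total prompt tokens in the request
    cached_tokens: int   # prompt tokens served from cached model memory
    output_tokens: int   # generated tokens

    def __post_init__(self) -> None:
        if not 0 <= self.cached_tokens <= self.prompt_tokens:
            raise ValueError("cached_tokens must be between 0 and prompt_tokens")


# Placeholder prices in dollars per million tokens (assumptions).
PRICE_PREFILL = 1.00
PRICE_CACHED = 0.10
PRICE_DECODE = 4.00


def attribute_cost(q: QueryRecord) -> float:
    """Dollar cost of one query, split by how its tokens were produced."""
    computed = q.prompt_tokens - q.cached_tokens
    return (
        computed * PRICE_PREFILL
        + q.cached_tokens * PRICE_CACHED
        + q.output_tokens * PRICE_DECODE
    ) / 1_000_000


def cache_hit_rate(records: list[QueryRecord]) -> float:
    """Share of all prompt tokens that were reused rather than recomputed."""
    total = sum(r.prompt_tokens for r in records)
    return sum(r.cached_tokens for r in records) / total if total else 0.0


records = [QueryRecord("q1", 8_000, 0, 200), QueryRecord("q2", 8_000, 7_500, 200)]
for r in records:
    print(r.query_id, f"${attribute_cost(r):.5f}")
print(f"hit rate: {cache_hit_rate(records):.1%}")
```

With these placeholder prices the uncached query `q1` costs $0.0088 and the mostly cached `q2` costs $0.00205, which is the kind of per-query breakdown that shows where reuse is paying off.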

The Competitive Imperative

Organizations must move beyond reactive cost management methods and adopt scalable cost visibility systems to control spending, improve efficiency, and maximize ROI. The companies that solve inference efficiency now will have fundamental advantages:

Operational Advantages

- Deploy more sophisticated models within existing budgets

- Scale inference capacity without linear cost increases

- Respond to demand spikes without infrastructure anxiety

- Experiment with new capabilities at lower risk

Strategic Advantages

- Competitive pricing for AI-powered features

- Faster feature iteration and deployment

- Capital efficiency that extends runway

- Infrastructure that scales with business growth

Market Positioning

While competitors struggle with inference economics, efficient organizations can:

- Offer superior service levels at competitive prices

- Invest GPU savings into model quality and capability

- Scale aggressively without burning capital

- Build sustainable AI businesses

Getting Started with Tensormesh

Organizations ready to transform their inference economics can begin immediately:

1. Assess Current State

Evaluate your existing inference costs, GPU utilization, and workload patterns. Identify redundant computations and efficiency gaps.
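One quick way to start this assessment is to measure how much of your existing traffic repeats work that was already done. The sketch below assumes you can export prompts from your logs as token lists (tokenization is left out). For each request, it counts the tokens in the longest prefix shared with any earlier request. `shared_prefix_ratio` is an illustrative helper, not a Tensormesh tool, and because it assumes an unbounded cache its result is an optimistic estimate.

```python
def shared_prefix_ratio(requests: list[list[str]]) -> float:
    """Fraction of prompt tokens already seen as a prefix of an earlier request.

    Assumes an unbounded cache that keeps every prefix forever, so the
    result is an optimistic estimate of how much prefill could be reused.
    """
    trie: dict = {}  # nested dicts: token -> child node
    reused = total = 0
    for tokens in requests:
        node = trie
        matching = True
        for tok in tokens:
            if matching and tok in node:
                reused += 1       # this position was computed before
            else:
                matching = False  # first divergence ends reuse for this request
            node = node.setdefault(tok, {})
        total += len(tokens)
    return reused / total if total else 0.0


log = [
    "system: be concise | user: refund policy?".split(),
    "system: be concise | user: shipping times?".split(),
    "system: be concise | user: refund policy?".split(),
]
print(f"{shared_prefix_ratio(log):.0%} of prompt tokens repeat earlier work")
```

In this tiny log, 12 of 21 tokens (57%) repeat earlier work: the second request shares its first five tokens, and the third is an exact repeat. A high ratio on real traffic is a sign that model memory caching is worth evaluating.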

2. Deploy Tensormesh

Visit www.tensormesh.ai to access the beta platform. Integration requires minimal configuration; most teams are running production workloads within hours.

3. Monitor and Optimize

Use Tensormesh's observability tools to track cost reductions, latency improvements, and model memory cache efficiency. Continuously refine configurations as workload patterns evolve.
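Tracking cache efficiency over a recent window, rather than as a single lifetime average, makes shifts in workload patterns visible. Below is a minimal sketch of a sliding-window token hit rate. The class name, its inputs, and the default window size are illustrative assumptions, and Tensormesh's own dashboards may compute this metric differently.

```python
from collections import deque


class RollingHitRate:
    """Token-level cache hit rate over the most recent `window` requests."""

    def __init__(self, window: int = 1000) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.samples: deque[tuple[int, int]] = deque(maxlen=window)
        self.cached = 0
        self.total = 0

    def record(self, cached_tokens: int, prompt_tokens: int) -> None:
        # When the window is full, the oldest sample is about to be evicted,
        # so remove its contribution from the running sums first.
        if len(self.samples) == self.samples.maxlen:
            old_cached, old_total = self.samples[0]
            self.cached -= old_cached
            self.total -= old_total
        self.samples.append((cached_tokens, prompt_tokens))
        self.cached += cached_tokens
        self.total += prompt_tokens

    @property
    def value(self) -> float:
        return self.cached / self.total if self.total else 0.0


monitor = RollingHitRate(window=2)
monitor.record(0, 100)    # cold request
monitor.record(100, 100)  # fully cached request
monitor.record(100, 100)  # the cold request falls out of the window
print(f"{monitor.value:.0%}")
```

Keeping running sums makes each update constant-time, so the metric can be updated on every request without rescanning the window.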

4. Scale Confidently

As inference demands grow, Tensormesh automatically optimizes resource allocation, ensuring consistent performance and cost efficiency at any scale.

The Path Forward

The AI inference cost crisis is real, but it's not inevitable. Organizations that address computational efficiency now through intelligent model memory caching, unified framework integration, and comprehensive observability will define the next phase of AI deployment.

The question isn't whether your AI infrastructure can be more efficient. The question is: how much longer can you afford to wait?

Ready to transform your inference economics? Visit www.tensormesh.ai to get started, or contact our team to discuss your specific infrastructure challenges.

Tensormesh — Redefining AI Inference Through Intelligent Efficiency

Sources

- CloudZero State of Cloud Cost Report - https://www.cloudzero.com/state-of-cloud-cost/

- MIT NANDA Report via Entrepreneur - https://www.entrepreneur.com/business-news/most-companies-saw-zero-return-on-ai-investments-study/496144

- Axios coverage of MIT study - https://www.axios.com/2025/08/21/ai-wall-street-big-tech

- The Hill via MIT report - https://thehill.com/policy/technology/5460663-generative-ai-zero-returns-businesses-mit-report/

- CNBC - https://www.cnbc.com/2024/09/27/openai-sees-5-billion-loss-this-year-on-3point7-billion-in-revenue.html

- Where's Your Ed At - https://www.wheresyoured.at/why-everybody-is-losing-money-on-ai/

- CloudZero cloud computing statistics - https://www.cloudzero.com/blog/cloud-computing-statistics/

- The Decoder - https://the-decoder.com/openai-and-anthropic-lose-billions-on-ai-development-and-operations/

- CloudZero press release - https://www.prnewswire.com/news-releases/cloudzero-survey-finds-engineering-cloud-cost-ownership-equals-better-business-outcomes-302124000.html

- TRGDatacenters - https://www.trgdatacenters.com/resource/nvidia-h100-power-consumption/
